How to Recognize AI-Generated Pictures, Videos, and Audio

We actively work with experts in various fields to add value to our articles. This time, the Social Links Center of Excellence team has invited CyberDetective, a co-author with vast cybersecurity knowledge, to collaborate on an article. For anyone unfamiliar, CyberDetective has long worked to spread OSINT know-how and cybersecurity education, so we’re thrilled to have them collaborate with us.

In this article, we look at the Internet’s most recent obsession: AI-generated content, and specifically how to recognize when a piece of media has been tampered with. For convenience, we divided the article into three sections, each dealing with a specific type of content: images, videos, and audio. In each, we cover

Now, let us give the floor to CyberDetective.

In recent years, fact-checking has gone from a challenging task to a considerably tougher one. The main reason for this is the emergence of numerous free AI tools for generating images, video, and audio.

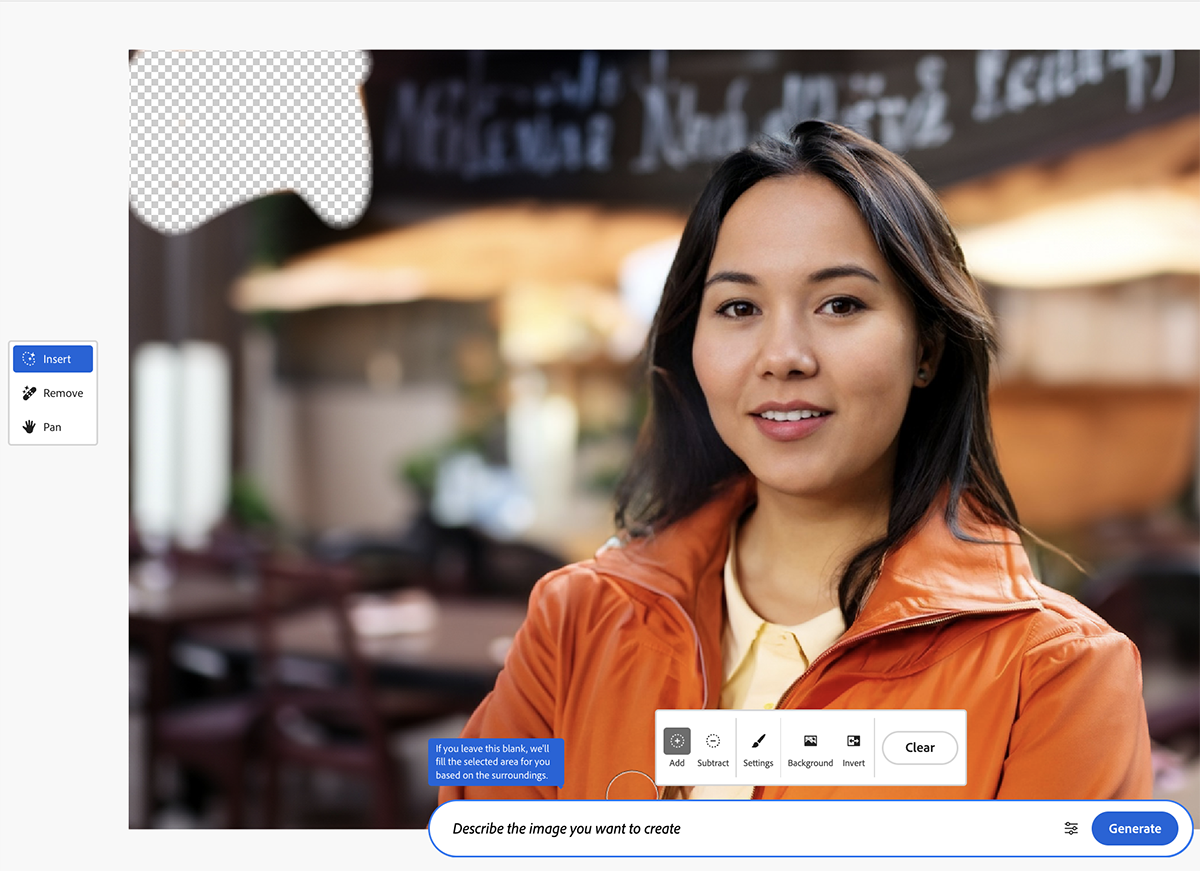

When Photoshop appeared in 1990, it already had massive photo editing and tampering potential. But now, the software comes with Adobe Firefly, a generative AI that lets users complete in just five seconds tasks that used to take an experienced professional an hour. Today, all that's needed is to select an area in an image and describe the necessary changes in writing.

A similar scenario plays out with audio and video content. While programs allowing the manipulation of someone else's words have existed for decades, AI-driven tools have accelerated the creation of fakes severalfold.

Today, OSINT specialists encounter many tampered images, videos, and audio recordings during investigations, and these are often fakes of relatively high quality. Below, you’ll find practical details and techniques to help you recognize such forgeries faster. Unfortunately, no method can guarantee a 100% success rate.

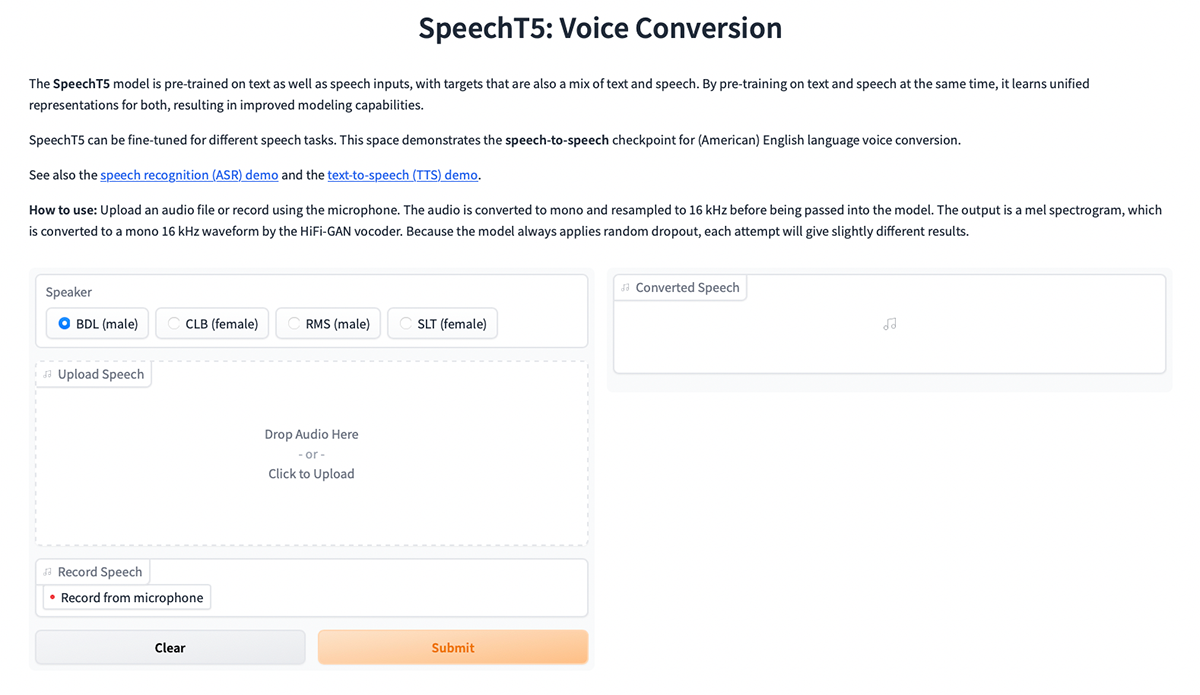

There are various online tools that recognize AI-generated pictures automatically. Solutions like AI or Not, ContentScale AI Image Detector, and AI Image Detector can do a decent job of identifying tampered images. However, these sites are not entirely reliable, especially when AI has edited only part of the picture.

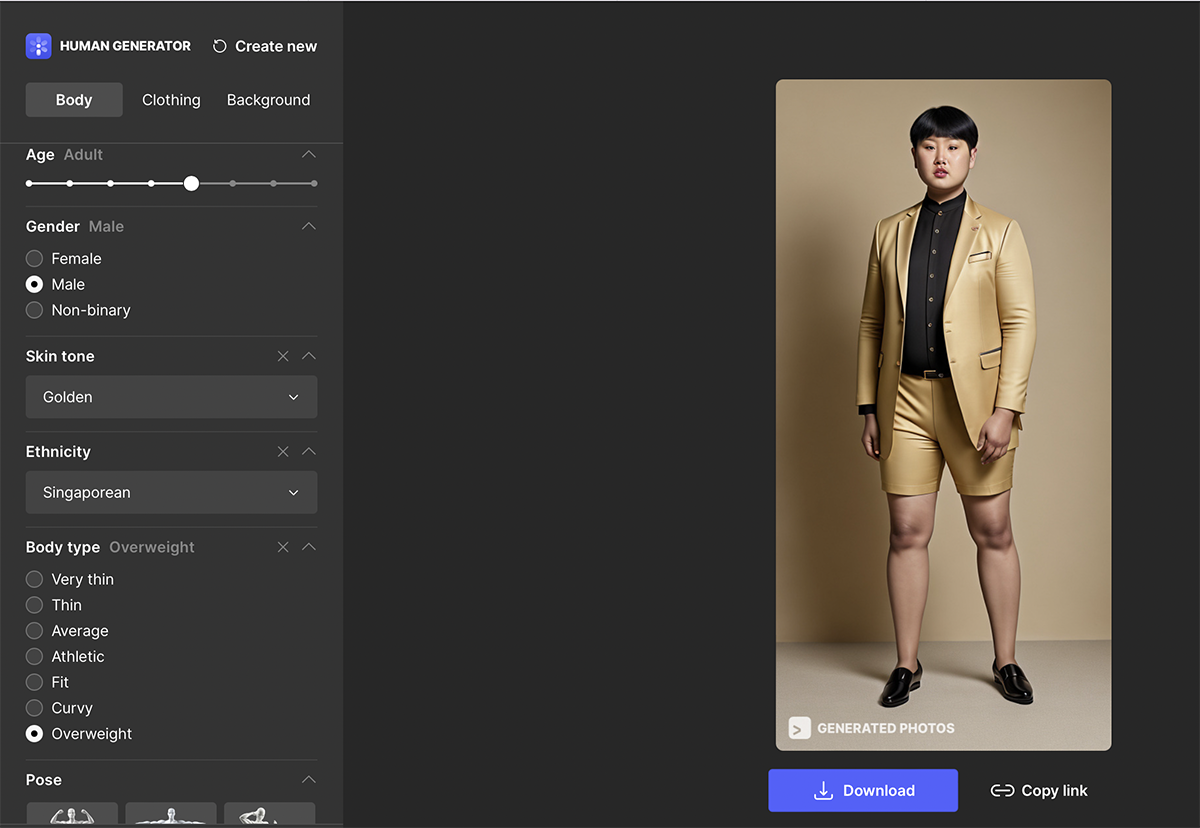

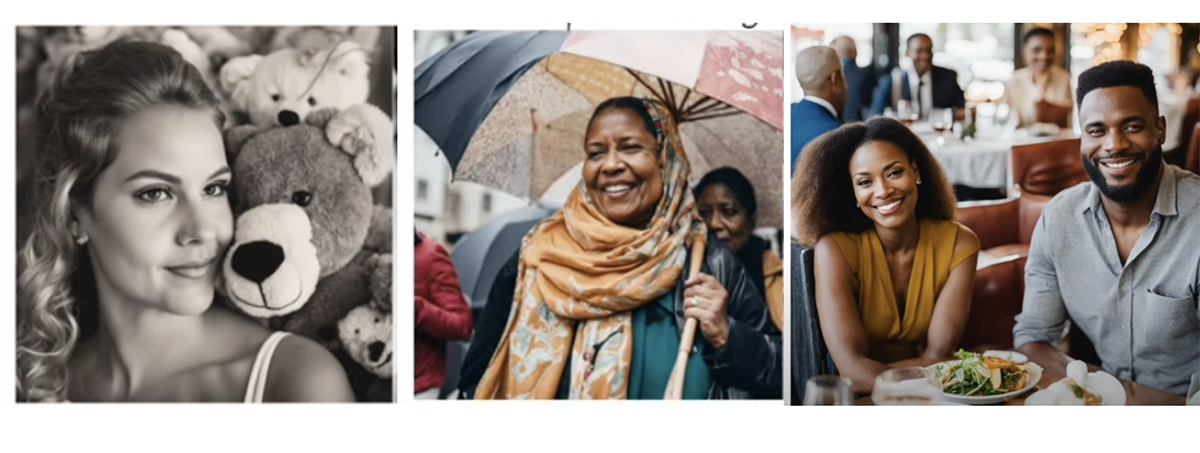

Easily detectable AI-generated photos often exhibit specific characteristics that appear unnatural to the human eye. Focusing on the following aspects is crucial:

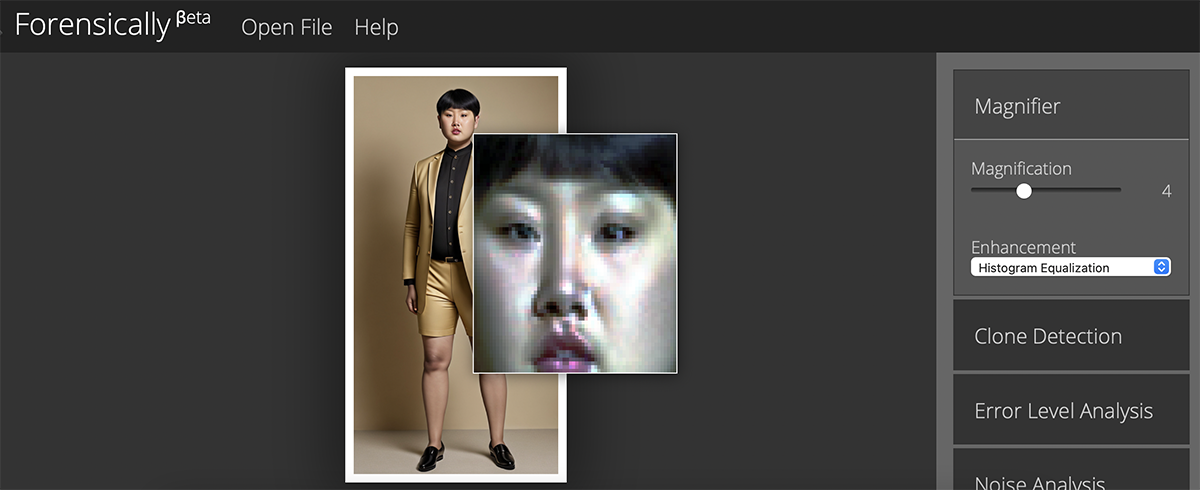

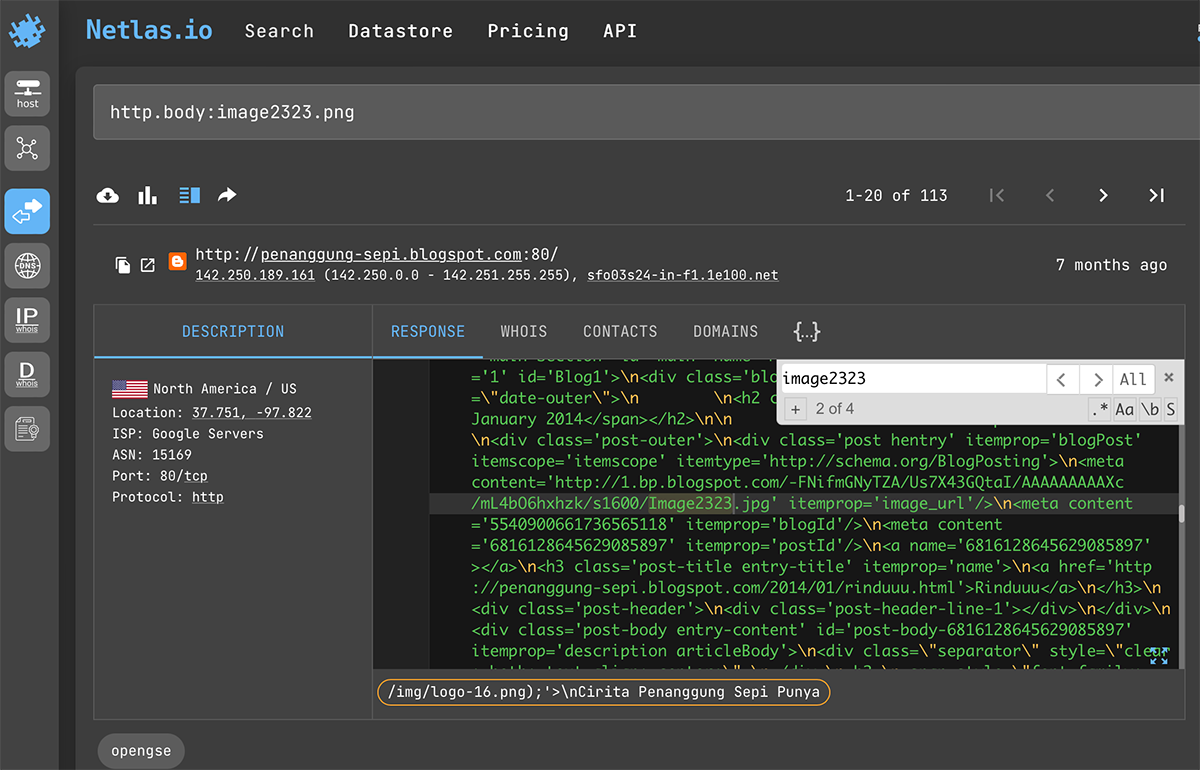

However, seasoned investigators naturally pay attention to the listed details. The real challenge is spotting cases where neural networks have tampered with only part of an image. In such cases, the same tools used for identifying standard photomontages can provide a way forward:

It's important to understand that high-quality fakes can be exceptionally challenging to detect. For instance, if AI tools, like those from Bria Labs, were employed to generate PSD files, each layer may have been meticulously refined. Consequently, it's not advisable to declare a picture authentic based on visual inspection alone.

As with images, there are specialized tools for identifying AI-generated audio, such as AI Voice Detector and Voice Classifier for Detecting AI Voices. However, these tools are still evolving and remain less effective than the human ear. Most people can readily detect unnatural aspects in AI-generated speech.

This unnaturalness becomes particularly evident when you listen to audio at very fast or slow speeds. You can adjust the playback speed in most audio players or use an online tool like the Time Stretch Audio Player. Additionally, you can open the recording in any sound editor to inspect the appearance of sound waves using tools like AudioMass.
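If you prefer to prepare slowed-down or sped-up copies offline, the idea can be sketched in a few lines of Python. This is a minimal sketch, not the method used by any of the tools named above: it assumes an uncompressed WAV file, and the function name `resave_at_speed` is hypothetical. It simply rewrites the declared sample rate, so pitch shifts together with speed, which is usually acceptable for a listening check.

```python
import wave


def resave_at_speed(src_path: str, dst_path: str, speed: float) -> int:
    """Copy a WAV file, scaling its declared sample rate by `speed`.

    speed=0.5 plays at half speed, speed=2.0 at double speed.
    The audio samples themselves are untouched; only the header changes,
    so pitch rises or falls together with the playback speed.
    Returns the new sample rate.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")

    # Read the original parameters and raw sample data.
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        frames = src.readframes(params.nframes)

    new_rate = max(1, round(params.framerate * speed))

    # Write the same samples back with the scaled sample rate.
    with wave.open(dst_path, "wb") as dst:
        dst.setnchannels(params.nchannels)
        dst.setsampwidth(params.sampwidth)
        dst.setframerate(new_rate)
        dst.writeframes(frames)

    return new_rate
```

For example, `resave_at_speed("clip.wav", "clip_slow.wav", 0.5)` would produce a half-speed copy (both file names are placeholders) that you can open in any audio player.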

If the voice is AI-generated, the sound waves are likely to look very clean and similar to each other, because AI sound generation is essentially a process of "drawing" the sound.

Audio recordings created by a live person tend to sound more natural and varied.
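One rough way to put a number on how "similar to each other" the waves look is to measure how much loudness varies from one short window to the next. The sketch below is only an illustration under stated assumptions: it expects a 16-bit mono WAV file, the function names and the 1024-sample window are arbitrary choices, and the resulting value is not a detector. The article gives no threshold, so treat it as a way to compare a suspect recording against a known-genuine one, nothing more.

```python
import array
import math
import statistics
import sys
import wave


def read_mono_16bit(path):
    """Load samples from a 16-bit mono WAV file (assumption of this sketch)."""
    with wave.open(path, "rb") as w:
        if w.getsampwidth() != 2 or w.getnchannels() != 1:
            raise ValueError("sketch expects 16-bit mono WAV")
        data = array.array("h")
        data.frombytes(w.readframes(w.getnframes()))
    if sys.byteorder == "big":
        data.byteswap()  # WAV data is little-endian
    return data


def window_rms(samples, window):
    """RMS loudness of consecutive, non-overlapping windows."""
    out = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        out.append(math.sqrt(sum(s * s for s in chunk) / window))
    return out


def loudness_variation(samples, window=1024):
    """Coefficient of variation (stdev / mean) of the windowed RMS values.

    A value near 0 means every window is about equally loud;
    larger values mean loudness changes more from window to window.
    """
    rms = window_rms(samples, window)
    if len(rms) < 2:
        raise ValueError("need at least two full windows")
    mean = statistics.fmean(rms)
    return statistics.pstdev(rms) / mean if mean else 0.0
```

Calling `loudness_variation(read_mono_16bit("suspect.wav"))` and doing the same for a reference recording of the same speaker gives two figures to compare side by side (the file name is a placeholder).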

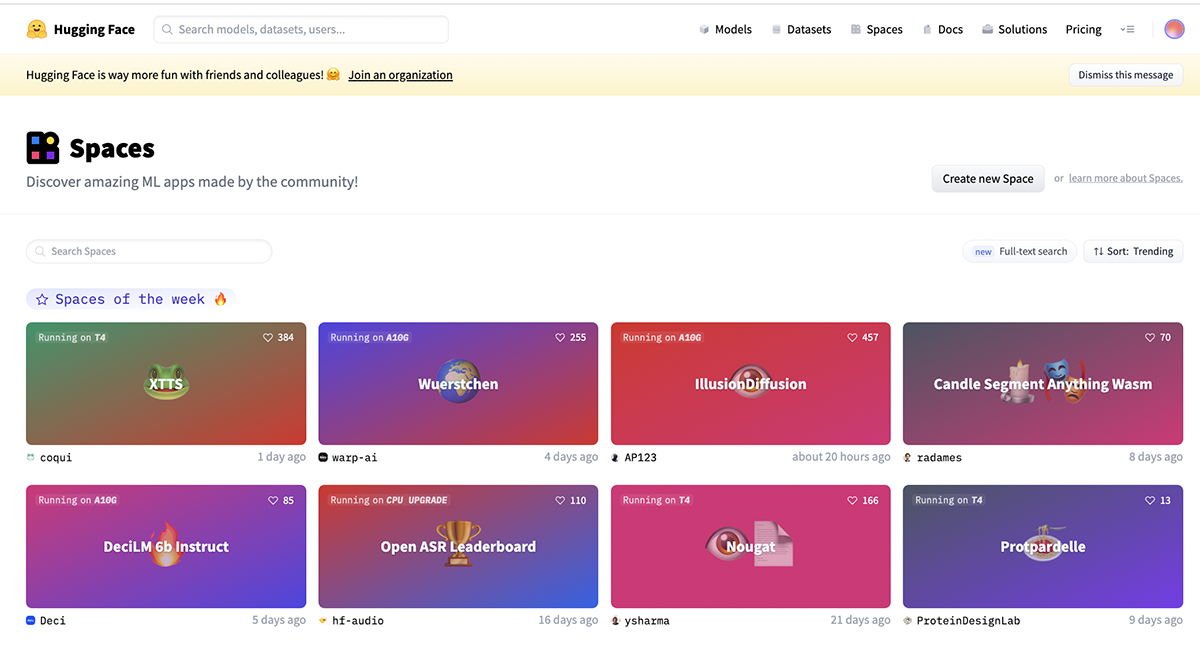

Note: Online demos, like those available on Hugging Face, are configured to work with short video clips. The platform does this to conserve resources. However, individuals can run these same AI models on their personal servers or computers, allowing them to generate much longer video clips.

You can detect deepfake videos, which replace one person's face with another, using online tools like Deepfake Detector and Deepware Deepfake Video Scanner. However, these tools have limitations and may not detect other types of video fakes, such as alterations to uniforms or backgrounds. Therefore, meticulous manual examination is the most effective way to identify fake videos. Pay close attention to unnatural or poorly executed details in the video, as discussed in the image section.

Tools like Anilyzer can help you analyze YouTube or Vimeo videos frame by frame, and you can also download videos and view individual frames using VLC player. Converting video files to image sequences with Clvideo allows for frame-by-frame exploration using the same image analysis tools mentioned earlier.
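If you would rather script the conversion to an image sequence, the step can be sketched with ffmpeg. Note that ffmpeg is swapped in here as an assumption; it is not one of the tools named in this article. The helper names and the `frame_%06d.png` naming pattern are hypothetical, and the sketch assumes ffmpeg is installed and available on your PATH.

```python
import shutil
import subprocess
from pathlib import Path


def frame_dump_command(video, out_dir, fps=None):
    """Build an ffmpeg command that writes video frames as numbered PNGs.

    With fps=None no filter is applied and ffmpeg writes frames at the
    stream's own rate; fps=1 would keep roughly one frame per second.
    """
    cmd = ["ffmpeg", "-hide_banner", "-i", str(video)]
    if fps is not None:
        cmd += ["-vf", f"fps={fps}"]
    cmd.append(str(Path(out_dir) / "frame_%06d.png"))
    return cmd


def dump_frames(video, out_dir, fps=None):
    """Run the command above, creating the output folder if needed."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(frame_dump_command(video, out_dir, fps), check=True)
```

For instance, `dump_frames("clip.mp4", "frames", fps=2)` would write about two PNG frames per second of video into a `frames` folder, ready to be checked with the image analysis tools.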

In recognizing AI-generated and edited media files, your level of attention to small details is crucial. To become proficient in identifying AI-generated content, consider exploring more examples of what modern neural networks can accomplish. Running the services from the first subsection of each section and observing their output will help you develop an intuitive sense for distinguishing AI-generated elements.

AI technology is rapidly evolving, and new tools are continually emerging. The features listed in this article may become outdated quickly. To stay informed about the latest advancements in AI, it's advisable to regularly check the Trending Spaces tabs on platforms like Hugging Face.

Please note that the landscape of AI tools is dynamic. Since the time of writing, which can be more than a month before publication, newer and improved services may have already replaced some of the tools mentioned in this article. Notably, the Hyper Human model sets new standards for generating lifelike images nearly indistinguishable from real ones. The techniques and advice provided in this article may not be effective for such advanced AI-generated content.

An impressive tool for AI-generated video is Runway Gen-2. The solution recently received an update for its text-to-video functionality. The results are promising, with high image quality, improved color reproduction, and the ability to adjust camera position and speed. While some elements still need improvement, the end results are already lifelike. In fact, Ammaar Reshi noted the quality of the resulting work on X (formerly Twitter).

It's essential to remain vigilant, adapt to evolving technologies, and continuously enhance your skills in recognizing AI-generated content across various media types. Advancements in AI will undoubtedly keep extending what sound and video generation can do.