January’s OSINT News: AI for Fraud and Hacking, India’s Cyber Ambassadors, and Facial Recognition to Enforce Hijab Laws

Welcome to our first OSINT digest of the year!

With fraudsters finding ever-new ways to get at our data, it’s no surprise that cybercrime is on the rise. The start of the year has shown that the threat keeps growing, so staying informed remains the most essential tool for digital safety.

In January's digest, we give our take on AI-powered emails that sound just like the real thing, the Iranian government using facial recognition systems to enforce laws, security flaws in police apps, and much more.

It’s been quite an eventful month in cybersecurity and OSINT, so let’s dive in!

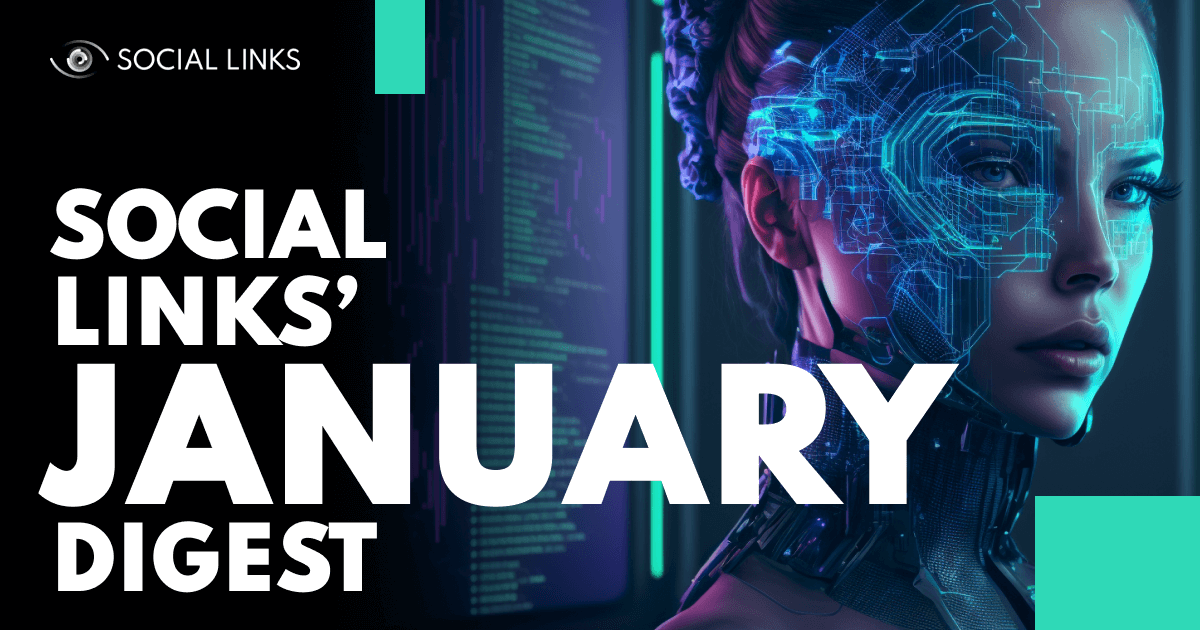

When the subject of AI-generated content comes up, a common response is: “well, I can’t see it replacing humans because it lacks the human touch.” What exactly is this elusive quality, though? Is it the ability to create something that is seen as “artistic”? Or is it something more malicious, such as criminal intent?

Designers around the world have started asking themselves whether they could be replaced by the likes of DALL-E 2, Midjourney, and Stable Diffusion. After all, these systems have been creating better designs at a much faster rate. Is writing any different? Some may say yes, but GPT-3 and ChatGPT are churning out text that is nearly indistinguishable from human writing. What’s more, their output is perceived as sounding “more real and trustworthy.”

The thing is that we are already in the midst of an AI revolution that is, frankly, quite scary. According to a WithSecure report, GPT-3 is already being used to generate spear-phishing emails, and this looks like the beginning of a trend, not just an outlier. The Register’s take on the topic shows that these bots can create some pretty convincing fake news articles. The automation of criminal activity is very real.

The Security Affairs research team has done some hands-on experimentation on this. They wanted to see if they could use ChatGPT to hack a website. Their target was the cybersecurity training platform “Hack the Box.” The result? A resounding success. With the chatbot walking them through the process, the team completed the hack in only 45 minutes!

But it’s not all doom and gloom. A recent study shows that it is possible to train an AI algorithm to sniff out texts that were not written by humans. What are the implications for the OSINT sphere? Cybersecurity experts can use such a model to flag AI-generated texts and warn against potential fraud and social engineering attacks.

With AI-generated content becoming harder for many people to tell apart from human work, new tools are required to combat AI-augmented cybercrime. While this creates new problems to solve, it also opens up market niches that could become lucrative business opportunities for software developers.

The world of fraud is not limited to social engineering and “Nigerian Prince” email scams. Children are still frequently targeted with malicious links offering gems and coins for their favorite mobile games. After the transaction, the kids are left empty-handed, and their parents lose a chunk of their savings.

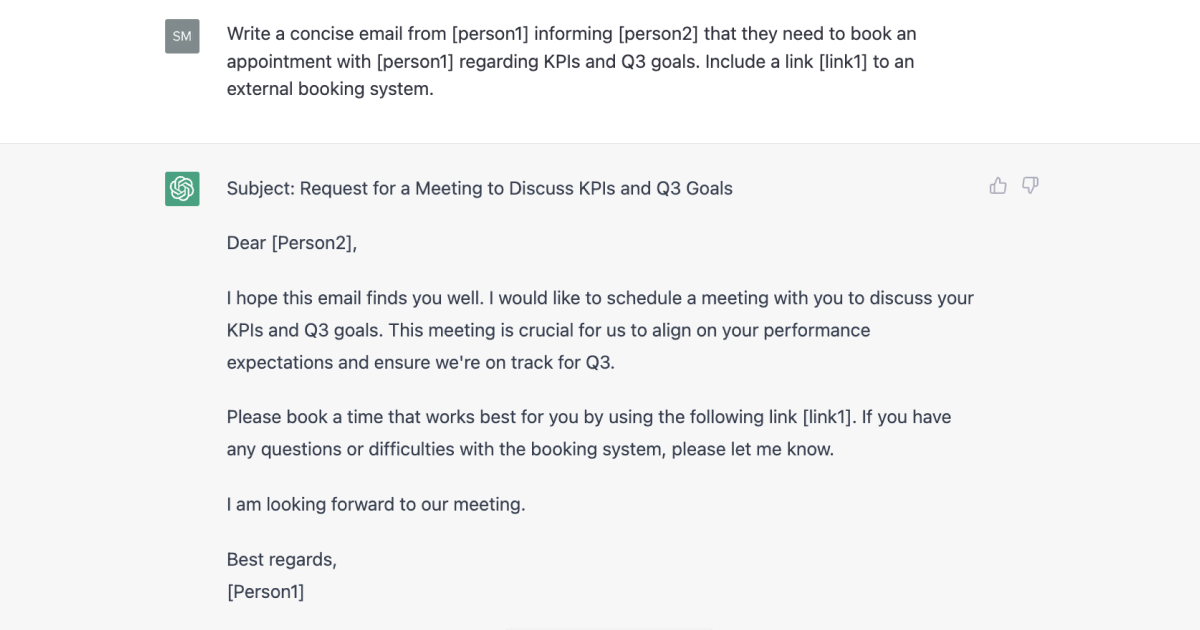

Cyberscams are big in India. After cases surged by 86% since the start of the pandemic, the state of Telangana began looking for ways to combat the issue. Its solution turned out to be the creation of the Cyber Congress. While this may sound quite sci-fi, its primary goal is to educate children on how to protect themselves against fraud online.

Enter the ‘Cyber Ambassadors’. These are people who have taken a 10-month course in digital safety and are qualified to teach the topic at schools. The graduates have already proved effective at helping people avoid scams by teaching their relatives and friends the basics of online safety.

Of course, the number of Cyber Ambassadors is currently quite limited. There are around 3k on active duty, with a reported 10k more in training. The state of Telangana has a sizable pool of students, around 5M across 40k schools. So, it’s safe to say that the ambassadors will have their work cut out for them. However, every little bit counts, and as far as starts go, this seems to be a step in the right direction.
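To put those figures in perspective, here is a quick back-of-the-envelope calculation. It is only a rough sketch: it uses the rounded numbers quoted above and assumes, purely for illustration, that ambassadors are spread evenly across students and schools. The helper name `per_ambassador` is our own.

```python
# Rounded figures quoted in the digest (all approximate).
students = 5_000_000
schools = 40_000
active_ambassadors = 3_000
ambassadors_in_training = 10_000


def per_ambassador(total, ambassadors):
    """Average load per ambassador, assuming an even spread (an illustrative simplification)."""
    return total / ambassadors


# Students and schools per ambassador today.
print(round(per_ambassador(students, active_ambassadors)))    # 1667
print(round(per_ambassador(schools, active_ambassadors), 1))  # 13.3

# Students per ambassador once everyone in training has graduated.
all_ambassadors = active_ambassadors + ambassadors_in_training
print(round(per_ambassador(students, all_ambassadors)))       # 385
```

Even with the full cohort trained, each ambassador would still be responsible for several hundred students on average.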

Offline grassroots efforts have proved effective in teaching the basics of cybersecurity to the most vulnerable demographics. To spread this know-how, governments may have to make cybersecurity a mandatory subject in schools. The sooner the better. But this raises a crucial question: will education departments be ready for such innovation?

Conducting hundreds of raids at a time can put a serious strain on a law enforcement unit. So, when a handful of Californian police departments adopted SweepWizard, a coordination app for law enforcement agencies (LEAs), they thought they had saved themselves some headaches. The app allowed the agencies to plan and execute a series of raids that were deemed successful.

But something was wrong. As WIRED recently revealed, SweepWizard turned out to have significant API vulnerabilities. As a result, information on around 200 secret raids was leaked to the public. The data included the names and addresses of 5,770 suspects and 1,000 social security numbers, some of which belonged to juveniles. Amid concerns that the leaked information might affect jury decisions, the app was shut down and an investigation was launched to assess the level of damage.

The current reality is that most law enforcement officers lack basic operations security (OPSEC) knowledge. Considering how quickly criminals are becoming tech-savvy, how long will it be before this gap affects a more crucial area of operation? Immediate action should be taken, or the consequences could become dire.

The use of facial recognition in law enforcement is a hotly debated topic, as it invariably comes across as an authoritarian act. However, what if a government doesn’t mind being seen as domineering?

According to WIRED, Iran is getting ready to deploy a system for enforcing its hijab law on women. Under the country’s laws, women have been required to cover their heads in public spaces since 1979. With infrastructure obtained from Chinese AI and camera company Tiandy, and a biometric database of face scans started in 2015, Iran is ready to enforce these laws on a new level.

You may ask why the measures are becoming so draconian. The short answer is that more women are refusing to wear a hijab. Many see the customary headwear as a symbol of government oppression, and it has become an important focal point in the fight for civil liberties.

Following the death of 22-year-old Mahsa Amini in 2022, Iran erupted in an ongoing series of protests against the state, which have resulted in an estimated 19k arrests and 500 deaths. Facial recognition has already made its mark, with a significant portion of hijab-related arrests having taken place in people’s homes.

This is a vivid example of how data analysis and AI can be used to oppress people rather than protect them. Likewise, OSINT can be used either to help or to oppress. Technological trendsetters therefore need to think about the moral implications of their cybersecurity tools and use them accordingly.

Iran is setting a grim example of how high tech, in the hands of authoritarian governments, can be used for oppressive purposes, and it raises questions about AI ethics and regulation. Some propose that developers should be required to embed restrictions within their AI systems to limit potential misuse, while others argue that such restrictions would only empower the developers themselves as technical dictators. In the current climate of discourse, Nick Bostrom’s debate on AI’s meta-regulation is more relevant than ever before.

New month, new cybersecurity glossary entry. For this edition, we chose a term that we should all become familiar with. Typing in our passwords has become a commonplace ritual for most of us. Whether it’s at the ATM, on our phones, or at our computers, many of us can do it from muscle memory. We of course try to do so discreetly, so nobody gets their hands on our passwords, but do we take cameras into account?

CCTV footage has a reputation for being grainy and short on detail, but that is no longer the case. As of 2023, there are 4K-resolution security cameras in use, many of which even come equipped with thermal imaging.

That’s the core idea of this month’s term: thermal attacks. In short, this means using the heat you leave on surfaces against you. Let’s go over the physics of it real fast. When you type a password at an ATM, a little heat from your fingers transfers to the keys. This is the residual heat that thermal cameras pick up.

Developed by a University of Glasgow research team, the AI system ThermoSecure uses consumer-grade thermal cameras to scan heat left on surfaces. The image is then run through an algorithm that can crack passwords up to 16 symbols long based on the amount of heat on each key. With an impressive 86% accuracy rating for fresh heat traces, and 62% for those left a minute before, the system shows how effective thermal attacks can be.
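To make the principle concrete, here is a toy sketch in Python. It is not ThermoSecure’s actual algorithm, which is not described in this digest. It simply assumes that keys pressed more recently are still warmer, so sorting the touched keys from coolest to warmest gives a guess at the order in which they were typed. The function name, the temperature readings, and the thresholds are all made up for illustration.

```python
def guess_key_order(readings, ambient_c=22.0, min_delta_c=0.5):
    """Guess the typing order from per-key surface temperatures (in °C).

    Toy assumption: a key pressed earlier has had more time to cool down,
    so the coolest touched key was pressed first and the warmest last.
    """
    # Keep only keys that are noticeably warmer than the surroundings.
    touched = {
        key: temp
        for key, temp in readings.items()
        if temp - ambient_c >= min_delta_c
    }
    # Coolest first (pressed earliest), warmest last (pressed most recently).
    return sorted(touched, key=touched.get)


# Hypothetical readings from a keypad shortly after a PIN was entered.
readings = {"1": 22.1, "4": 23.0, "7": 24.2, "9": 23.6, "0": 22.0}
print(guess_key_order(readings))  # ['4', '9', '7']
```

A real attack has to cope with repeated keys, uneven finger pressure, and heat fading over time, which is why the accuracy quoted above drops as the trace gets older.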

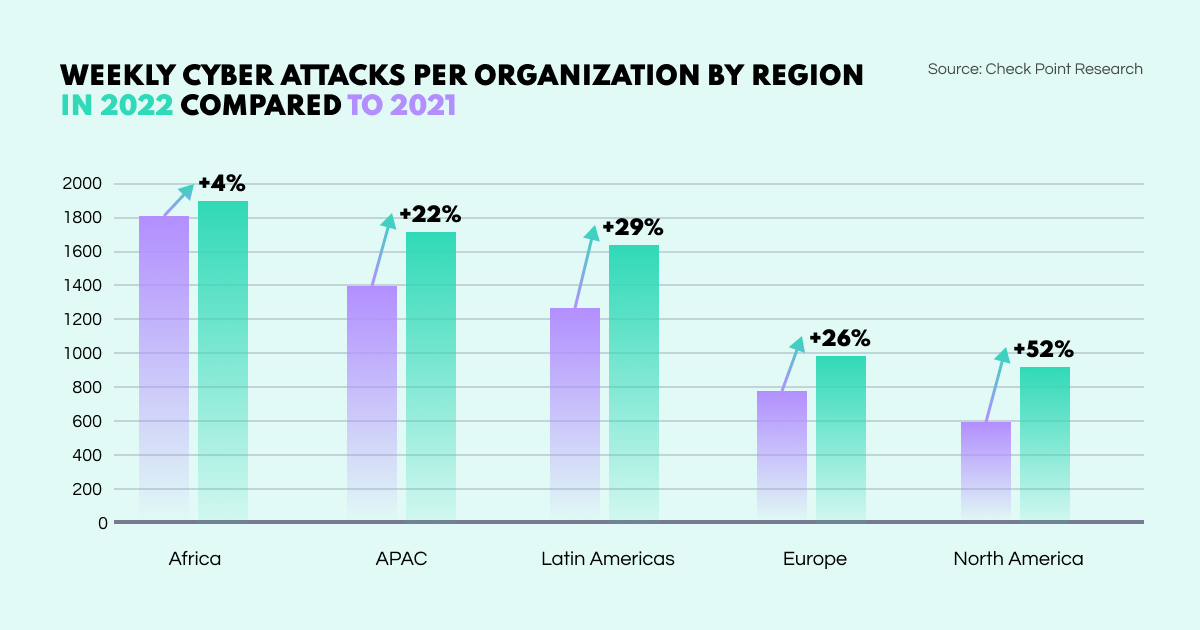

Driven by a rise in smaller gangs and ransomware groups, weekly cyberattacks per organization rose by 38% in 2022 compared to 2021. The attacks primarily targeted Education/Research, Government, and Healthcare institutions, and AI systems such as ChatGPT could push these numbers to alarming levels in 2023.

The Federal Reserve and other financial institutions have outlined the key risks for the banking sector regarding the usage of cryptocurrencies.

IT security company Netwrix has outlined key trends to follow in 2023. Notable among them are the professionalization of cybercrime, more sophisticated supply chain attacks, and the bigger role of channel partners amid understaffing. Despite increasing automation, the human factor remains the primary security concern. With more and more investment pouring into security tool development, many companies are looking to consolidate services with their vendors of choice.

As a next step in Brexit, UK officials are going to replace the EU’s General Data Protection Regulation (GDPR) with their own national data protection policy called the “Data Protection and Digital Information Bill.” However, many experts doubt the effectiveness of the change, as data providers and processors will face more difficulties working internationally.

The Chinese government is introducing new regulations that will require deepfake images to be flagged as “augmented” to combat potential scams and confusion. Taking effect on January 10, the new rules are geared towards protecting national security and China’s core social values, as well as the rights and interests of citizens and organizations, government agencies reported.

In the last days of 2022, Slack had to publicly admit a security issue with its system, which had led to the exposure of its externally hosted GitHub repository and some of the company’s code. While no customer data was leaked or accessed by any malicious entities, Slack stated that it is working with its vendors on credential rotation to strengthen its security.

And that’s the end of the January digest! Stay informed on all things OSINT through our blog, where we publish the latest news and insights so you can stay up to date. Keep an eye out for the next edition.