Using the Power of ChatGPT for OSINT

If you've been on Twitter or newsfeeds lately, you've probably heard of ChatGPT. This talkative chatbot created by OpenAI has taken the internet by storm and sparked countless debates about the future of various professions. While some believe that AI is a sign of impending job losses in creative fields, others view it as a potentially lucrative opportunity for making money with minimal effort. Students are dreaming of never having to write another essay, while everyone else is watching intently to see what new developments emerge.

Our latest article will explore the nature and applications of this growing technology, highlighting both its positive and negative impacts. Specifically, we will examine how ChatGPT can be used in OSINT investigations and what its potential implications for the future might be. Let's begin.

There are many ways to approach the question of what ChatGPT is and what it can do. To get started, we asked the chatbot to define itself.

"As a language model developed by OpenAI, I'm an AI program designed to generate human-like text. My primary purpose is to assist with answering questions, generating text, and engaging in conversations on a wide range of topics, such as history, science, and current events. I have been trained on a diverse range of internet text, which allows me to provide accurate and relevant information to the best of my abilities. However, it's important to keep in mind that I am not human and may make mistakes or provide information that is out of date or incorrect."

This is a modest definition, providing some details while still leaving plenty of room for further explanation. So, let’s unpack it.

ChatGPT, which stands for "Chat Generative Pre-trained Transformer," made its debut online in November 2022. Soon afterward, millions began signing up and exploring the chatbot's capabilities, which are considerable. Users were immediately impressed that it could handle all sorts of generative text-based tasks, from writing essays and stories to creating workout plans and recipes.

ChatGPT has proven adept at handling a wide range of text-based tasks with human-like fluency. It’s important to note, however, that the system cannot generate text without human input. Rather than fearing potential job losses, we’d be better off recognizing it as a system that can revolutionize how we work. Our tools are essentially neutral; it’s how we use them that matters. With that in mind, let’s explore a few emerging use cases and their potential impact on the world of open-source intelligence.

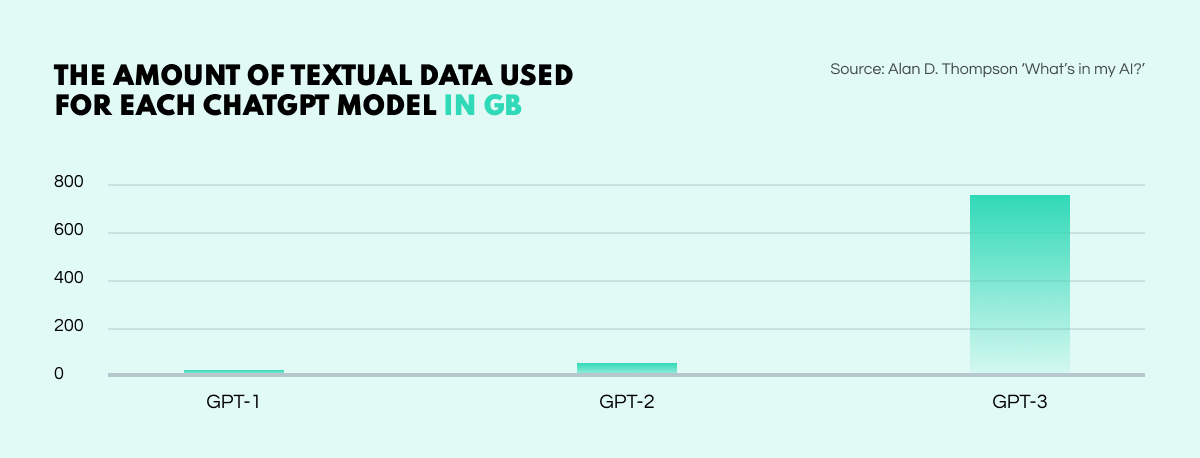

ChatGPT was trained using massive volumes of text from the internet, from ebooks to databases. Although the chatbot’s exact training data remains undisclosed, its predecessor, GPT-3, was trained on roughly 300 billion tokens drawn from a corpus of several hundred billion tokens of filtered web text, books, and Wikipedia. This enormous amount of data is the secret sauce that gives the AI’s writing its coherence and diversity. Further refined using human feedback, ChatGPT has great promise as a tool that could revolutionize various industries.

With researchers already citing ChatGPT as a co-author, publishers have started discussing whether AI could ever be considered an author in the true sense. Although an algorithm cannot take responsibility for the accuracy of what it writes, some researchers believe that chatbots could transform their work as a next-level resource for summarizing vast amounts of information effectively.

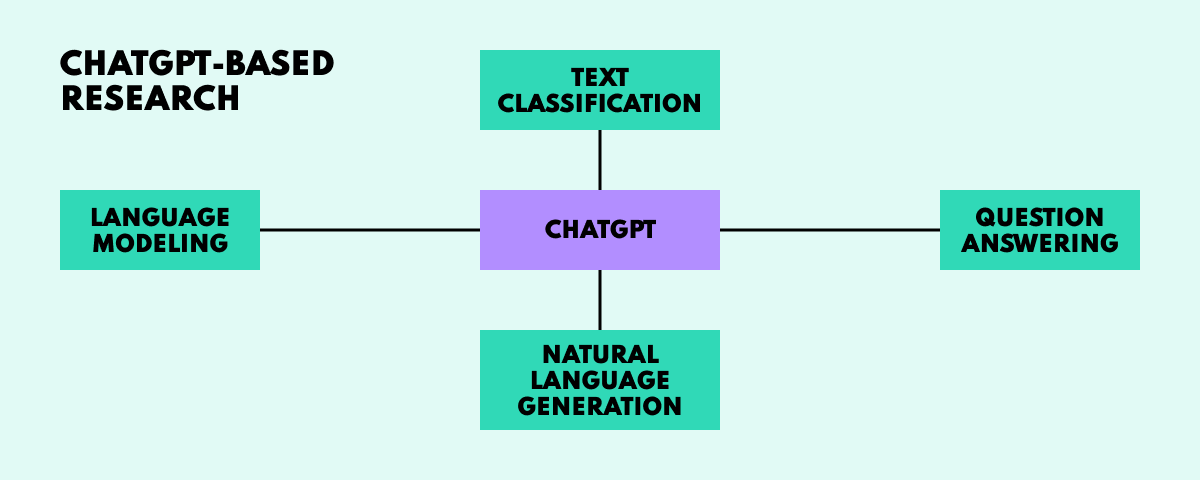

ChatGPT offers a variety of applications, including answering questions and classifying text by sentiment or topic, which makes it a promising tool for research. With well-tuned prompts, users can take a more conversational approach to their work, improving searches and making their workflows more efficient. However, some early users have expressed concerns about the model's tendency to make up facts, indicating an area for further development and improvement.

Recognizing the potential for automated research, tech companies have started racing to incorporate artificial intelligence into their respective search engines. With Microsoft, Google, and Baidu hard at work, the “search wars” are officially on.

The rise of AI writing models is reminiscent of the impact calculators had on number crunching. Just as Microsoft Excel once transformed and streamlined fields such as finance, human resources, and data science, many writers are now exploring how AI can enhance their writing process, not necessarily replace it.

Author Robin Sloan shared a plugin he developed that uses generative AI to come up with writing suggestions. He equates working with language models to writing with an irrational but very well-read parrot, who mimics how humans craft text but can suggest ideas that are pretty out there. In the same vein, many writers are looking to utilize language models for automating monotonous aspects of creating text.

For example, imagine a writer who excels at coming up with creative article ideas but faces writer’s block when it comes to starting. ChatGPT can help by providing prompts to serve as a jumping-off point for the full text. While content generated by AI is entertaining, it requires significant editing for structure and flow. Machines are not quite up to par with human writers, but they can empower them and positively impact the field.

ChatGPT has also proven itself competent at another form of writing: code. While it’s not going to come up with the next TikTok algorithm, it is quite adept at handling a variety of programming languages, which has sparked some concerns among the developer community. However, just as with plain text, the code generated by ChatGPT requires editing and further refinement.

Code completion has long been indispensable for many programmers. Microsoft's IntelliSense, for instance, ships with Visual Studio and Visual Studio Code, and most other Integrated Development Environments (IDEs) offer something similar. Generative AI might prove to be the next step in this evolution, and GitHub Copilot, which is powered by OpenAI’s Codex model, was an early move in that direction. By automating basic but essential bits of code, ChatGPT could potentially make programming more approachable and streamlined. This could also free up time for developers to focus on more complex tasks, leading to greater productivity and innovation.

ChatGPT can also offer developers technical support and code reviews. In addition to assisting with software installation, configuration, and troubleshooting, it can act as a knowledge base, idea generator, and educational resource. Not only can the language model provide feedback on potential bugs, suggest alternative approaches, and offer guidance on best practices, it can also serve as a hub for common questions and problems in various programming languages and frameworks.

AI cuts both ways: the same capabilities that aid legitimate work can be exploited by threat actors. Despite OpenAI's efforts to restrict misuse, the safeguards can still be bypassed by wrapping a request in a hypothetical "what if" scenario, a form of prompt engineering often called jailbreaking. In fact, malicious actors have already found various ways to exploit the young language model. Let’s explore some of the risks they present.

Ask ChatGPT for help with hacking, and this is the response:

"ChatGPT is not intended to facilitate hacking or any other form of cybercrime. The use of ChatGPT or any other tool for illegal or unethical purposes is strictly prohibited and can have serious consequences. It is important to use such tools only for legitimate purposes and in accordance with applicable laws and regulations."

There are some things the chatbot is unwilling to discuss. This is good and shows the built-in restrictions at work. But what if the question is framed differently with some prompt engineering?

In an article published on System Weakness, one user described an experiment in which they challenged the language model to help them hack the cybersecurity training platform TryHackMe. They report that they obtained the website's credentials and logged in as an administrator.

AI developers try to combat potential abuses of their language models through content filters. These safeguards are triggers that tell the system to refuse to answer certain queries. Researchers at CyberArk tested the limits of ChatGPT by prompt-engineering the system to help them create malware. As expected, the team was able to phrase their requests in such a way that the chatbot walked them through the creation of sophisticated malicious code.

Underground hacker communities have taken note: many conversations have popped up detailing user experiments in harnessing ChatGPT to develop info stealers and ransomware. As our tools evolve, so does the scope of their effects, and AI language models could prove to be instrumental in the development of advanced malware in the future.

How might ChatGPT impact fraudulent practices? Phishing emails are a dime a dozen. However, many of them are written by people whose native language is not English, and the resulting poor grammar and unnatural phrasing give them away as suspicious.

In an interview with TechTarget, Chester Wisniewski, principal researcher for the security vendor Sophos, fielded several questions regarding the malicious use of ChatGPT. Wisniewski noted that in the experiments he conducted with the language model, it seemed to be proficient in mitigating some of the giveaways regarding grammar and word choice, making scam messages sound more natural. When compared with regular translation software, the chatbot can take creative liberties in its wording to make the text sound more fluent.

So, with AI models streamlining and polishing phishing emails, expect to see better-crafted messages dropping into inboxes. This makes it all the more necessary to verify who we are talking to online rather than relying on language slips alone to spot a scam.

Because ChatGPT does not have access to the open internet and its training data stops at a fixed cutoff, the information it provides might be out of date, so it should always be taken with a grain of salt. A bigger problem is that chatbots can fabricate convincing-sounding claims outright. This tendency to make things up is known as hallucination, and the risks it presents are alarming on closer inspection.

As Fortune has recently reported, when asked to write a blog post on the widely debunked claim that COVID-19 vaccines are harmful, the chatbot composed text that was pretty much indistinguishable from the many false claims that had been doing the rounds on social media. With many experts warning that the AI model can be used to spread misinformation and aid online propaganda campaigns, it seems like fact-checking will soon be more important than ever. Considering ChatGPT’s almost instantaneous composition abilities, bogus articles could easily flood news cycles and online feeds to disastrous degrees.

With so much hype and fear surrounding the development of AI, it was only a matter of time before financial influencers on YouTube jumped on the bandwagon by making wild claims regarding ChatGPT. Presenting the chatbot as the way to make passive income, many have outlined methods that are remarkably similar to those put forward by fraudsters on underground forums. Generating and selling nonsensical ebooks or creating a blog that writes itself as part of a portfolio is just the tip of the iceberg.

With many positive and even more negative applications of generative AI, the prevailing question becomes one of safety. While OpenAI has released a tool that shows initial promise in identifying AI-generated texts, its accuracy remains limited. Learning to identify computer-generated texts and doing your own research (DYOR) still seems to be the most effective way of minimizing the risks in the short term. However, there are alternative approaches in development as well.

Researchers suggest that the main difference between AI-generated and human-written text is the level of randomness in word choice: language models tend to pick more predictable words. Systems that analyze predictability can therefore help estimate whether a given piece was machine-generated. University of Maryland researcher Tom Goldstein has proposed having language models follow hidden word-choice patterns that would act as a watermark, distinguishing generated text from human writing. While this approach is not foolproof, it is an emerging field of study.

Pressure to stand apart from machine-generated text could push human writing toward a more artistic or subjective style in the future. It remains to be seen whether such practices will become widely adopted or if other methods will be developed to ensure text authenticity.
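The watermarking idea described above can be pictured with a toy detector. The sketch below assumes a heavily simplified scheme in which the previous word pseudo-randomly marks about half of all possible next words as "green", and a watermarking generator prefers green words. The function names, the SHA-256 hashing, and the 0.5 fraction are illustrative assumptions, not the researchers' actual implementation.

```python
import hashlib
import math
from typing import List

GREEN_FRACTION = 0.5  # assumed share of words marked "green" at each step


def is_green(previous_word: str, word: str) -> bool:
    """Pseudo-randomly assign `word` to the green list, seeded by the previous word."""
    digest = hashlib.sha256(f"{previous_word}|{word}".encode("utf-8")).digest()
    return digest[0] / 256 < GREEN_FRACTION


def watermark_z_score(words: List[str]) -> float:
    """Return how many standard deviations the green-word count sits above chance."""
    pairs = list(zip(words, words[1:]))
    if not pairs:
        return 0.0
    green = sum(is_green(prev, cur) for prev, cur in pairs)
    expected = GREEN_FRACTION * len(pairs)
    std_dev = math.sqrt(len(pairs) * GREEN_FRACTION * (1 - GREEN_FRACTION))
    return (green - expected) / std_dev


def toy_watermarked_text(vocabulary: List[str], length: int, start: str) -> List[str]:
    """Simulate a watermarking generator that always picks the first green candidate."""
    words = [start]
    for _ in range(length):
        candidates = [w for w in vocabulary if is_green(words[-1], w)]
        # Fall back to an arbitrary word in the unlikely case nothing is green.
        words.append(candidates[0] if candidates else vocabulary[0])
    return words
```

Ordinary text should score close to zero on average, while text from the toy generator scores far above it. A real detector would work on model tokens rather than whole words and would need access to the watermarking key.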

Having discussed the various uses and abuses of a frontier tool, it’s important to note that this technology will continue to evolve. Like search engines, chatbots could greatly expand the field of OSINT. Users are anticipating rapid changes in information retrieval, news aggregation, data analysis, report generation, and social media analysis.

ChatGPT can aid in OSINT investigations by quickly identifying login pages, sensitive information, and files. This saves time and expands the scope of a given operation, allowing investigators to gather more information and conduct a more thorough analysis. When used appropriately, AI assistants can be highly advantageous in conducting research. It's important to recognize the critical role that human investigators play in the process, as success hinges on matching the right tool to the task. Let’s look at some fields where the chatbot is showing significant potential:

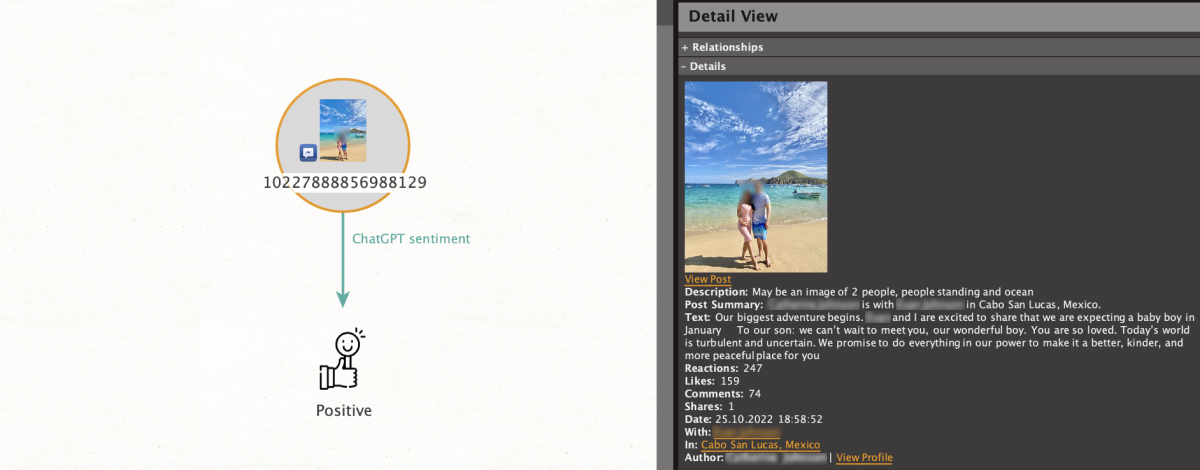

Sentiment Analysis. Classifying large amounts of text based on subjective content can be complex and time-consuming. Natural language processing (NLP) is used to determine whether a piece of text has a positive, negative, or neutral tone, and incorporating machine learning has shown promise in improving the accuracy and efficiency of this process. ChatGPT has been demonstrated to be a valuable tool for analyzing large datasets efficiently and presenting the results in a relevant manner.
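As a rough illustration of how such a classification could be wired up, the sketch below builds a prompt and normalizes the reply into one of three labels. The prompt wording, the function names, and the `ask_model` callable are assumptions made for this example; the call to the chatbot itself is deliberately left to the caller.

```python
from typing import Callable

LABELS = ("positive", "negative", "neutral")


def build_sentiment_prompt(text: str) -> str:
    """Compose an instruction asking for exactly one sentiment label."""
    return (
        "Classify the sentiment of the following text as positive, negative, "
        "or neutral. Reply with a single word.\n\n"
        f"Text:\n{text}"
    )


def parse_label(reply: str) -> str:
    """Normalize a model reply to a known label, or 'unknown' if it does not match."""
    cleaned = reply.strip().lower().strip(".!\"' ")
    return cleaned if cleaned in LABELS else "unknown"


def classify(text: str, ask_model: Callable[[str], str]) -> str:
    """Send the prompt through a caller-supplied function and parse the answer."""
    return parse_label(ask_model(build_sentiment_prompt(text)))
```

Passing the model call in as a function keeps the example independent of any particular API, and the explicit "unknown" fallback matters because a chatbot will not always answer in the requested format.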

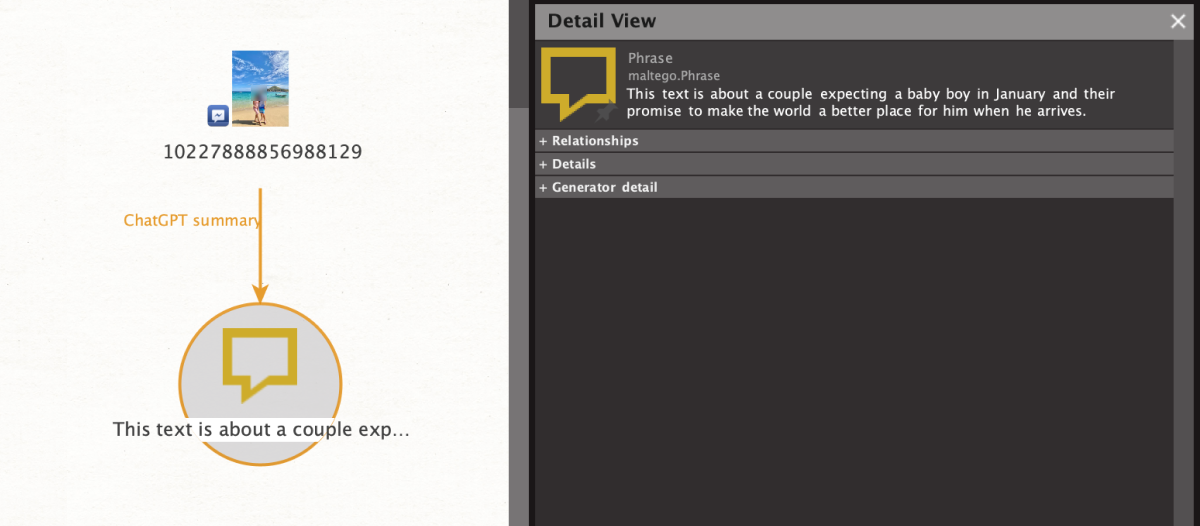

Summarization. ChatGPT's biggest advantage is its speed, and it can provide effective summaries without compromising meaning. Redditors have discussed specific prompts for ChatGPT to provide TL;DR synopses of articles or posts. It is becoming increasingly popular to use language models to analyze data, especially when the amount of text starts to pile up. Because prompting skills carry over from one task to the next, data scientists and investigators may create a handbook of prompts to take advantage of chatbot capabilities more effectively.
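A prompt handbook of the kind mentioned above could be as simple as a dictionary of reusable templates. The following is a minimal sketch; the template names and wording are invented for illustration and would need tuning against real texts.

```python
# Hypothetical prompt handbook: names and wording are illustrative only.
PROMPT_HANDBOOK = {
    "tldr": (
        "Summarize the following text in {sentences} sentences, "
        "keeping names, dates, and figures.\n\nText:\n{text}"
    ),
    "key_points": (
        "List the {count} most important points of the following text "
        "as short bullet points.\n\nText:\n{text}"
    ),
}


def render_prompt(name: str, **fields: object) -> str:
    """Fill in a named template, failing loudly if the name is not in the handbook."""
    try:
        template = PROMPT_HANDBOOK[name]
    except KeyError:
        raise ValueError(f"No prompt named {name!r} in the handbook") from None
    return template.format(**fields)
```

For example, `render_prompt("tldr", sentences=2, text=post)` produces a ready-to-send prompt for a given `post`, and new templates can be added to the handbook as the team discovers what works.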

Translation. Chatbots are changing how people approach both language translation and the translation of code between programming languages, according to recent discussions online. Incorporating generative AI could help users better understand colloquialisms, slang, and code. This technology could be especially useful for investigators analyzing code written by hackers, as ChatGPT has been shown to accurately summarize code functions and provide line-by-line explanations. With potential applications in cross-language development and more, language models could prove instrumental in certain investigations.

At Social Links, we are actively embracing these bleeding-edge AI technologies to develop the most powerful and incisive OSINT tools we possibly can. While it has only been a few months since the headline-grabbing ChatGPT was released, we’ve already harnessed the chatbot to develop a range of new AI-powered text analysis features for our flagship solution, SL Professional. These include:

If you’re dealing with a lot of text, sometimes you just want a very quick assessment of its overall attitude without needing to read through it all. The first transform does precisely that, returning a result of positive, negative, or neutral.

The summarization transform is useful when you want to understand the gist of a given text. It analyzes the whole text for you, breaking it down into key points and generating a round-up of its content. For those who work with a lot of text, this function can significantly reduce the time spent on reading.

This transform analyzes text sentiment in terms of its lexical components, or ‘objects’, breaking down the text into units, which are each assigned a positive, negative, or neutral value. This function can be highly effective in helping you analyze a large number of texts.

For example, let’s say a new law is being passed and you want to know what the public thinks of the legislation, as well as of the politicians who are pushing it through. You can run a search on Twitter using the name of the new law as a keyword, then assess its reception using this feature.
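To make the aggregation step concrete, the sketch below tallies per-object sentiment across many texts. It assumes each text has already been reduced to a list of (object, label) pairs; that format is a hypothetical stand-in, since the transform's actual output structure is not described here.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical per-text result: (object, label) pairs.
ObjectSentiment = Tuple[str, str]


def tally_by_object(results: Iterable[List[ObjectSentiment]]) -> Dict[str, Counter]:
    """Count positive, negative, and neutral mentions for each object."""
    tallies: Dict[str, Counter] = defaultdict(Counter)
    for per_text in results:
        for obj, label in per_text:
            # Lowercase the object so "The Law" and "the law" are merged.
            tallies[obj.lower()][label] += 1
    return dict(tallies)


def net_score(counts: Counter) -> int:
    """Positive mentions minus negative mentions; neutral ones are ignored."""
    return counts["positive"] - counts["negative"]
```

Sorting the objects by `net_score` would then show at a glance whether the law itself or its sponsors attract the most negative attention.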

It is often the case that, while you are only interested in a particular topic, the text contains multiple themes that are not relevant to what you need. The topic transform separates the various topics within a text so you can see at a glance whether it contains relevant content. This can be helpful when you want to aggregate the various topics that might characterize an area of online discussion.

For example, let’s say we have a Facebook group and we want to understand the main topics which get discussed by its members. We can first extract all the individual posts by running [Facebook] Get Posts, then select all of our results and run [ChatGPT] Get Topics. This will not only break down the various topics within these posts but also show how common they are.
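The "how common they are" part of this workflow amounts to counting in how many posts each topic appears. Here is a minimal sketch of that counting, assuming the topics for each post are already available as lists of strings; the function name and input format are assumptions rather than part of SL Professional.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def topic_frequencies(post_topics: Iterable[Iterable[str]]) -> List[Tuple[str, int, float]]:
    """Return (topic, post count, share of posts), most common first."""
    # Use a set per post so a topic counts at most once for each post.
    posts = [{topic.strip().lower() for topic in topics} for topics in post_topics]
    counts = Counter(topic for topics in posts for topic in topics)
    total = len(posts)
    # Sort by descending count, then alphabetically so ties are stable.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(topic, n, n / total) for topic, n in ranked]
```

Counting each topic once per post keeps a single long post from dominating the ranking.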

This concludes our introduction to ChatGPT and its possible role in OSINT. We hope you had a pleasant read and gained some valuable insights into the influence of AI on our world amid the buzz surrounding this technology.