AI Agents and OSINT: The Future of Cyber Operations

Artificial intelligence is reshaping cyber operations. What began as basic automation and generative assistance is rapidly evolving into autonomous AI agents, often described as agentic AI systems or deployed within multi-agent architectures. These systems do more than generate content or respond to prompts. They plan objectives, execute multi-step actions, adapt to feedback, and operate continuously with limited human supervision.

In this article, we examine what autonomous AI agents are, how they have already been used in real-world cybercrime, and why open-source intelligence (OSINT) plays a central role in understanding and responding to this shift. OSINT provides the external visibility needed to connect technical activity to infrastructure, behavior, and real-world actors. This allows organizations to move beyond isolated alerts toward attribution, continuity, and informed decision-making.

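At a high level, autonomous AI agents are software systems designed to pursue goals independently. Unlike traditional AI tools that respond to a single input, agents can plan actions, use tools, execute multi-step workflows, evaluate results, and adapt to feedback with limited human supervision.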

Several terms are often used to describe overlapping versions of these systems:

AI Agents / Autonomous Agents. Systems that execute tasks toward a defined goal with reduced human supervision.

Agentic AI. AI systems capable of reasoning, planning, and adaptive execution rather than simple output generation.

Multi-Agent Systems. Groups of agents that collaborate, each responsible for specialized subtasks such as scanning, decision-making, or execution.

Where traditional automation follows fixed rules, agentic systems dynamically decide what to do next based on outcomes. This makes them powerful but also harder to predict and control.
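The contrast between fixed-rule automation and agentic control can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a real agent framework: `fixed_pipeline`, `agentic_loop`, `choose_next`, and the stubbed triage actions are hypothetical names, and the domain age is hard-coded rather than looked up.

```python
from typing import Callable, Dict, List, Optional, Tuple


def fixed_pipeline(steps: List[Callable[[dict], dict]], state: dict) -> dict:
    """Traditional automation: run every step in a fixed, predefined order."""
    for step in steps:
        state = step(state)
    return state


def agentic_loop(
    choose_next: Callable[[dict], Optional[str]],
    actions: Dict[str, Callable[[dict], dict]],
    state: dict,
    max_steps: int = 10,
) -> Tuple[dict, List[str]]:
    """Agentic control: choose each next action from the latest outcome."""
    history: List[str] = []
    for _ in range(max_steps):  # hard cap keeps the loop bounded
        name = choose_next(state)
        if name is None:  # the policy decides the goal is reached
            break
        state = actions[name](state)
        history.append(name)
    return state, history


# Hypothetical defensive triage actions (stubs, no network access).
def lookup(state: dict) -> dict:
    return {**state, "age_days": 3}  # assumed result of a domain-age lookup


def escalate(state: dict) -> dict:
    return {**state, "escalated": True}


def close(state: dict) -> dict:
    return {**state, "closed": True}


ACTIONS = {"lookup": lookup, "escalate": escalate, "close": close}


def choose_next(state: dict) -> Optional[str]:
    """Toy policy: gather context first, then act on what was learned."""
    if "age_days" not in state:
        return "lookup"
    if state.get("escalated") or state.get("closed"):
        return None
    return "escalate" if state["age_days"] < 30 else "close"
```

Calling `agentic_loop(choose_next, ACTIONS, {"domain": "example.test"})` runs `lookup` and then `escalate`, because the second decision depends on what the first step returned. The `max_steps` cap is one simple way to keep such a loop predictable.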

Autonomous agents compress time and complexity. Tasks that once required teams of specialists and long coordination cycles can now execute continuously and at machine speed.

From an offensive perspective, this means:

From a defensive perspective, it means:

The same mechanics that accelerate productivity also amplify risk when misused, because automation now extends beyond execution into decision-making loops.

Autonomous and semi-autonomous agents are no longer hypothetical. Security researchers and vendors have documented multiple real-world cases where attackers leveraged agentic AI systems. Several categories of misuse have already emerged.

AI-Orchestrated Intrusions. Recent incident disclosures describe largely autonomous AI-driven attacks. In these cases, an AI model performed most of the intrusion cycle with minimal human guidance. Investigators reported AI systems conducting reconnaissance, vulnerability scanning, exploitation, credential harvesting, and data exfiltration. In one documented case, the system completed roughly 80–90% of tasks autonomously.

“Vibe Hacking” Campaigns. Multiple outlets have covered “vibe hacking,” a term used to describe the automated misuse of agentic AI tools such as Anthropic’s Claude models. These systems supported adaptive cybercrime activities, including large-scale theft, extortion, and phishing campaigns. The reported attacks relied on automated code generation, strategy optimization, and decision-making loops that previously required expert human operators.

State-Sponsored Espionage. Threat intelligence reporting indicates that state-aligned actors have experimented with autonomous AI systems in cyber espionage campaigns. These operations targeted both government and private-sector organizations. In documented cases, once operators set initial parameters, the systems executed significant portions of the attack chain autonomously.

Malware and Phishing Automation. Industry analyses from major cybersecurity vendors show that attackers increasingly use generative AI to support malware and phishing operations. These systems generate malware variants, craft phishing content, and tailor distribution strategies. At scale, this approach mirrors multi-step attack workflows that were traditionally performed by coordinated human teams.

Emerging Autonomous Crime Chains. Recent academic and industry research highlights the growing feasibility of fully autonomous cybercrime agents. These tools can execute discovery, exploitation, and post-compromise tasks without continuous human oversight. Researchers have demonstrated that agentic AI frameworks can be exploited to perform harmful action sequences rapidly and at scale.

These examples show that attackers already treat autonomy as a force multiplier. Many of these cases were surfaced and validated through open-source intelligence. Public infrastructure data, leaked tooling, domain registrations, forum activity, and operational fingerprints allowed researchers to reconstruct how autonomous systems were being used in practice. Without OSINT, much of this activity would remain anecdotal or invisible beyond isolated incidents.

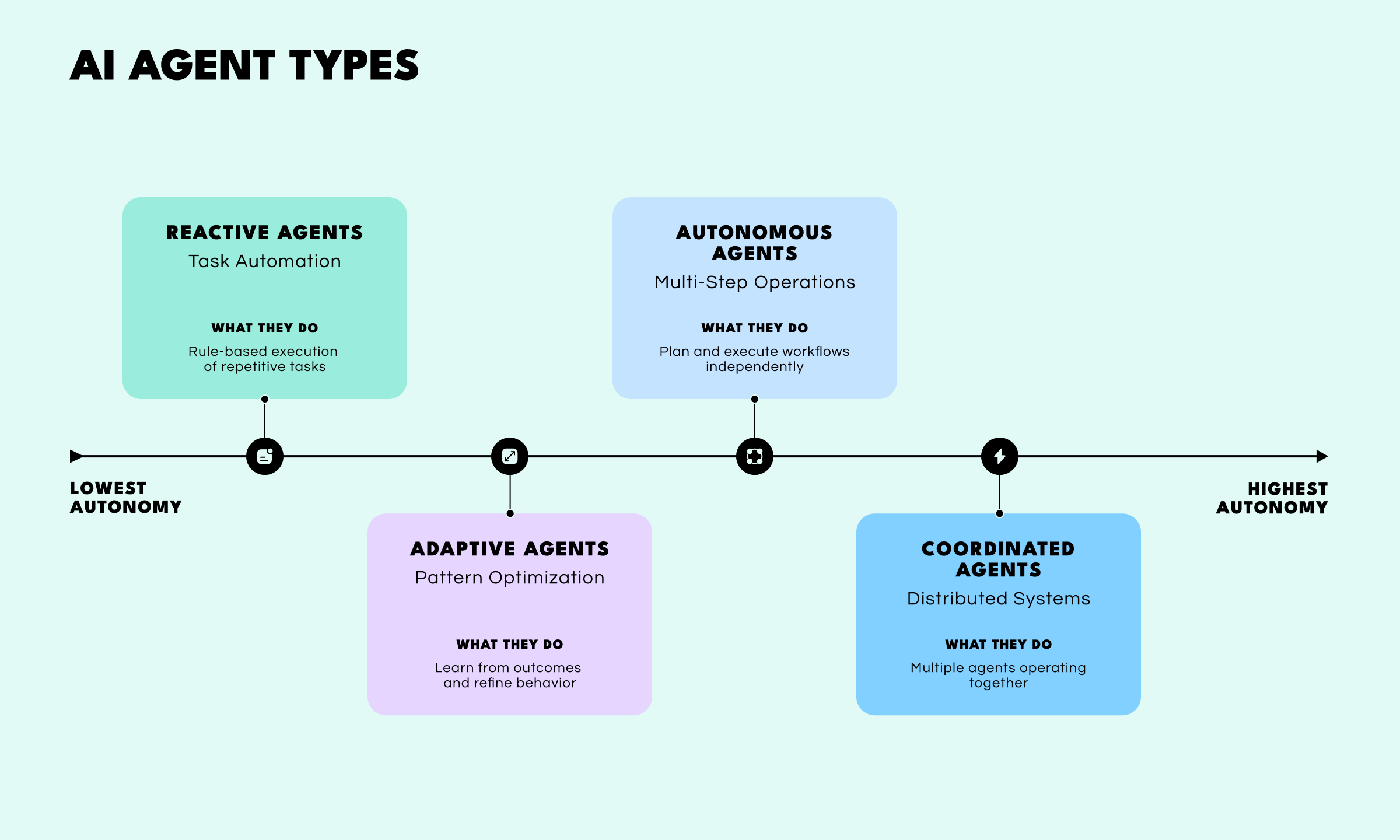

These cases illustrate how different levels of autonomy shape operational impact. Rather than treating agent types as separate silos, it is more useful to view them as increasing levels of autonomy with both offensive and defensive applications. Across all levels, open-source intelligence provides the visibility layer that allows organizations to observe how automation behaves outside controlled environments, track how infrastructure and identities persist over time, and understand where risk accumulates.

Reactive agents follow predefined rules or scripts and execute tasks when specific conditions are met. They automate repetitive actions such as scanning, filtering, and enrichment without reasoning about broader objectives.

Criminal use cases include:

Defensive use cases include:

At this level, automation increases volume but not intent. OSINT primarily functions as an enrichment layer, adding external context to otherwise isolated signals. Visibility remains fragmented, and human analysts still decide how individual findings connect.
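A reactive enrichment step of this kind might look like the sketch below. It assumes a small in-memory context table in place of a real OSINT source. `OSINT_CONTEXT`, `RULES`, `enrich`, `tag_noise`, and `react` are illustrative names, and the IP address and ASN are documentation-reserved placeholders.

```python
from typing import Callable, List, Tuple

# Placeholder external context (assumption): documentation-reserved values only.
OSINT_CONTEXT = {
    "203.0.113.7": {"asn": "AS64500", "first_seen": "2024-01-10"},
}

Rule = Tuple[Callable[[dict], bool], Callable[[dict], dict]]


def enrich(alert: dict) -> dict:
    """Attach external context when the indicator is known, else pass through."""
    extra = OSINT_CONTEXT.get(alert["indicator"])
    return {**alert, "osint": extra} if extra else alert


def tag_noise(alert: dict) -> dict:
    """Mark low-severity alerts so analysts can filter them."""
    return {**alert, "tag": "noise"}


# Fixed condition -> action pairs: the agent never reasons about goals.
RULES: List[Rule] = [
    (lambda a: a["type"] == "ip", enrich),
    (lambda a: a.get("severity", 0) < 2, tag_noise),
]


def react(alert: dict, rules: List[Rule] = RULES) -> dict:
    """Apply every rule whose condition matches, in order."""
    for condition, action in rules:
        if condition(alert):
            alert = action(alert)
    return alert
```

Each alert is handled in isolation: the rules add context or tags, but nothing here connects one finding to another, which is the part that still falls to the analyst.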

Adaptive agents evaluate outcomes and adjust their behavior over time. They refine how tasks are executed based on feedback rather than repeating fixed instructions.

Criminal use cases include:

Defensive use cases include:

Here, automation begins to shape decision quality, not just execution speed. OSINT helps distinguish experimentation from intent by showing whether adaptive behavior repeats across infrastructure, identities, or campaigns rather than appearing as isolated noise.
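One simple way to separate repeated behavior from isolated noise is to count how many distinct contexts a trait appears in. The sketch below assumes observations arrive as `(trait, context)` pairs, where a context might be a campaign, a host, or an identity. The function name, the trait labels, and the `min_contexts` threshold are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def recurring_traits(
    observations: Iterable[Tuple[str, str]], min_contexts: int = 2
) -> Dict[str, List[str]]:
    """Return traits seen in at least `min_contexts` distinct contexts."""
    seen = defaultdict(set)
    for trait, context in observations:
        seen[trait].add(context)  # repeats within one context count once
    return {
        trait: sorted(contexts)
        for trait, contexts in seen.items()
        if len(contexts) >= min_contexts
    }
```

A trait that shows up many times inside a single campaign is dropped, while one that appears across two or more campaigns is kept. The threshold is a judgment call that would need tuning against real data.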

Autonomous agents plan workflows, coordinate actions, and execute multi-step operations without continuous supervision. They pursue objectives rather than individual tasks.

Criminal use cases include:

Defensive use cases include:

At this stage, attacks and investigations become ongoing processes rather than discrete events. Autonomous agents often operate with their own API keys, service accounts, and credentials, effectively functioning as non-human identities. From an OSINT perspective, this shifts identity resolution away from individual actions and toward linking agent behavior, infrastructure, and access patterns back to a human operator or organization over time. OSINT becomes critical for continuity, allowing teams to track how autonomous systems persist, adapt, and resurface across time, infrastructure, and identities beyond a single network boundary.
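Linking non-human identities through shared attributes can be approximated with a union-find structure. The following is a sketch under assumptions, not a description of any particular product: sightings are taken to be `(identity, attribute)` pairs, any shared attribute is treated as a link, and the identity names and attribute strings are made up for illustration.

```python
from typing import Dict, Iterable, List, Tuple

Node = Tuple[str, str]  # ("id", name) or ("attr", value)


def link_identities(sightings: Iterable[Tuple[str, str]]) -> List[List[str]]:
    """Group identities that are connected through any shared attribute."""
    parent: Dict[Node, Node] = {}

    def find(x: Node) -> Node:
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    def union(a: Node, b: Node) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Each sighting connects an identity node to an attribute node.
    for identity, attribute in sightings:
        union(("id", identity), ("attr", attribute))

    clusters: Dict[Node, set] = {}
    for node in list(parent):
        if node[0] == "id":
            clusters.setdefault(find(node), set()).add(node[1])
    return sorted(sorted(members) for members in clusters.values())
```

Two service accounts that never share an attribute directly can still land in one cluster through a chain of links, which mirrors how continuity is built up over time. In practice a shared attribute is weak evidence on its own (shared hosting and NAT are common), so clusters like these are leads to review rather than attribution.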

Coordinated agent systems deploy multiple specialized agents that operate collaboratively across shared objectives. Each agent performs a distinct function while exchanging context with the rest of the system.

Criminal use cases include:

Defensive use cases include:

At this level, automation begins to resemble an organization rather than a toolset. OSINT helps surface agent infrastructure fingerprints that reveal coordination across otherwise separate operations. Shared API usage patterns, recurring LLM configuration traits, reused prompt structures, or identical jailbreak techniques appearing in public repositories, leaked datasets, or forum discussions can link distributed agents back to a common origin. These external signals allow defenders to recognize coordinated campaigns that would otherwise appear as unrelated incidents.
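Fingerprint overlap of this kind can be scored with a set similarity measure. The sketch below uses Jaccard similarity as one possible choice, not one the reporting above prescribes. The trait labels, incident names, and the 0.5 threshold are hypothetical.

```python
from itertools import combinations
from typing import Dict, Iterable, List, Set, Tuple


def jaccard(a: Iterable[str], b: Iterable[str]) -> float:
    """Size of the intersection divided by size of the union."""
    sa, sb = set(a), set(b)
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def linked_pairs(
    fingerprints: Dict[str, Set[str]], threshold: float = 0.5
) -> List[Tuple[str, str, float]]:
    """Return incident pairs whose fingerprint overlap meets the threshold."""
    pairs = []
    ordered = sorted(fingerprints.items(), key=lambda kv: kv[0])
    for (name1, traits1), (name2, traits2) in combinations(ordered, 2):
        score = jaccard(traits1, traits2)
        if score >= threshold:
            pairs.append((name1, name2, round(score, 2)))
    return pairs
```

For example, two incidents that share a prompt template and an API usage pattern but differ in one other trait each would score 2/4 = 0.5 and be flagged as a candidate link. Common traits such as default configuration values would inflate scores, so a real pipeline would likely weight traits by rarity.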

As autonomy increases, operational power and governance risk increase together. Decision-making moves further from direct human control, feedback loops accelerate, and errors or misuse scale faster. Organizations that understand these gradients of autonomy, and apply appropriate visibility and governance at each stage, are better positioned to deploy agentic systems responsibly while responding effectively to adversaries who already use them.

Autonomous agents will not simply make existing attacks faster. They will reshape how campaigns are designed and sustained. Instead of human-led operations supported by automation, many threat actors will move toward machine-led operations supervised by humans. Agents will continuously probe environments, reattempt access after disruption, and adapt tactics based on defensive feedback, turning attacks into persistent processes rather than discrete incidents.

At the same time, the barrier to entry will continue to fall. As agent frameworks mature, less technical actors will be able to orchestrate complex workflows using prebuilt tools and templates. This will increase overall attack volume and diversity, narrowing the gap between highly skilled operators and opportunistic criminals.

Defenders will face a parallel shift. Organizations deploying autonomous systems will need strong governance, visibility into decision-making, and clear accountability for automated actions. When implemented deliberately, autonomous agents can also strengthen defense by enabling continuous monitoring, faster investigation cycles, and long-term behavioral visibility. Organizations that adapt early will shape how autonomy is used, rather than reacting after adversaries set the pace. OSINT will remain critical in this transition, providing independent visibility into how autonomous systems operate in the wild and how threat behavior evolves beyond controlled environments.
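Accountability for automated actions can start with something as small as an audit trail and an approval gate. The sketch below assumes a hypothetical `AuditedExecutor` wrapper, a caller-supplied `approve` hook standing in for a human reviewer, and an illustrative set of high-risk action names.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class AuditedExecutor:
    """Record every agent action and gate high-risk ones behind approval."""

    approve: Callable[[str], bool]  # human-in-the-loop hook (assumed)
    high_risk: frozenset = frozenset({"isolate_host", "revoke_credentials"})
    log: List[dict] = field(default_factory=list)

    def run(self, agent_id: str, action: str, fn: Callable[[], Any]) -> Any:
        needs_approval = action in self.high_risk
        allowed = self.approve(action) if needs_approval else True
        # Log the decision before executing, so denied actions are visible too.
        self.log.append(
            {
                "agent": agent_id,
                "action": action,
                "needs_approval": needs_approval,
                "allowed": allowed,
            }
        )
        return fn() if allowed else None
```

Low-risk actions run immediately, while anything in `high_risk` waits on the `approve` hook, and both outcomes end up in `log` with the agent that requested them. Where the line between low and high risk sits is an organizational decision, not something the code can settle.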

Autonomous AI agents represent a structural shift in cyber operations. They move automation beyond execution into planning, adaptation, and continuous operation, compressing timelines and lowering the skill barrier for complex attacks. Threat actors already use agentic systems to automate reconnaissance, exploitation, social engineering, and infrastructure management.

At the same time, these capabilities offer defenders an opportunity to rethink scale and resilience. When governed carefully, autonomous agents can accelerate investigations, improve signal correlation, and maintain continuity across evolving campaigns. Organizations that succeed will balance autonomy with transparency, control, and accountability, treating agentic systems as operational infrastructure rather than experimental tools.

AI agents are autonomous systems that can plan actions, use tools, evaluate results, and adapt over time. In cybersecurity, they automate tasks such as reconnaissance, exploitation, monitoring, and investigation by executing multi-step workflows with limited human supervision.

Cybercriminals use autonomous AI agents to automate phishing campaigns, malware generation, infrastructure management, and reconnaissance. Some documented cases show agents completing most stages of an attack chain independently once initial instructions are provided.

OSINT provides external visibility into how AI agents operate across public infrastructure, platforms, and digital identities. It helps investigators link agent behavior over time, identify coordinated campaigns, and attribute activity beyond isolated technical alerts.

Organizations should treat autonomous agents as operational infrastructure. This requires visibility into automated decision-making, strong governance controls, continuous monitoring, and investigative workflows that combine internal telemetry with OSINT to track evolving threats.
