Fighting AI-Automated Crime: The New Defensive Paradigm

In 2024, a finance employee in Hong Kong transferred $25M after a video call with a deepfake CFO. The attacker? An AI agent trained to impersonate, manipulate, and execute. This wasn’t some anomaly; it was a glimpse into the future—a world where autonomous AI agents are weaponized for cybercrime, and where businesses must deploy equally intelligent defenses to survive.

In 2024 alone, about 4.3M machines were infected globally by infostealers, resulting in over 330M compromised credentials. How did it come to this?

The first wave of AI-powered cybercrime didn’t reinvent the wheel—it simply made it spin faster.

These attacks are cheap, scalable, and nearly indistinguishable from legitimate forms of communication. Yet the damage caused by such attacks could still have been minimized through employee training and the implementation of digital hygiene policies. However, human-led defenses—even well-trained ones—quickly proved inadequate against agents that could learn, adapt, and strike autonomously.

Cybercrime has entered a new phase. What began with simple automated tools has evolved into something far more sophisticated—autonomous AI systems that can learn from their environment, adapt their tactics, and carry out attacks with minimal human oversight.

These agents don’t just steal—they collaborate, evolve, and scale. We began monitoring them more closely and training defensive models to counter malicious agents. From that moment on, the battle between viruses and antiviruses entered an entirely new phase.

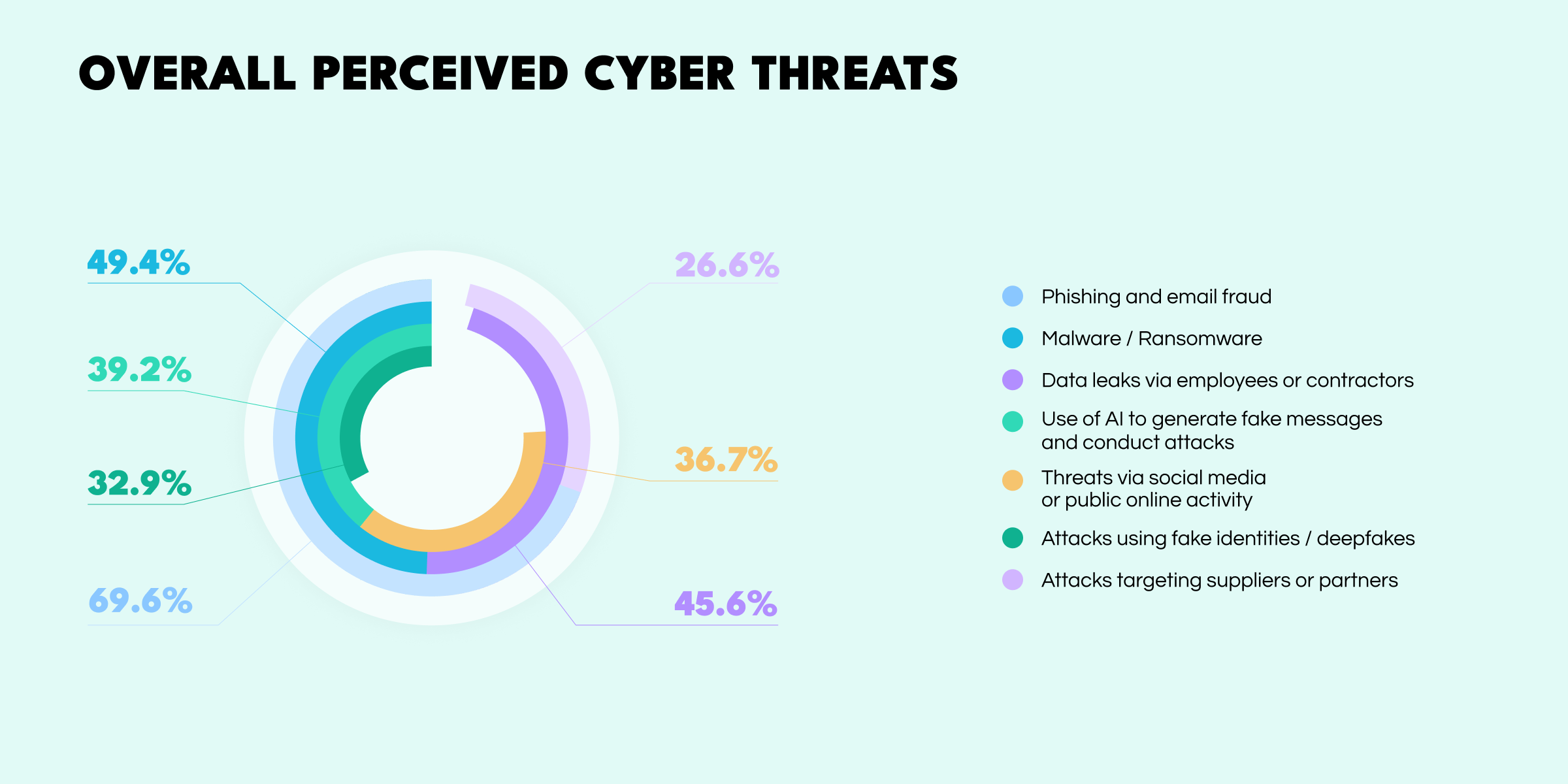

This shift from simple tools to autonomous agents is no longer theoretical: recent data shows how quickly these threats are spreading. According to a 2025 Social Links report, the scale and diversity of risks now facing businesses have triggered concern across departments and leadership tiers. The survey identifies several types of risks reported by respondents:

These numbers don’t just reveal technical vulnerabilities; they signal a deeper erosion of trust, not only among employees but also in the systems and tools they rely on.

Defensive AI is a guardian of the digital economy. It is the mechanism by which we can distinguish trustworthy agents from hostile ones and neutralize the latter before harm is done.

As AI-powered threats grow more sophisticated, our response must be equally intelligent, adaptive, and relentless. Just as every virus demands an antivirus, every malicious algorithm must be met with a protective one. In this new digital arms race, we must engineer forms of AI that are defensive—and not just passively, but proactively.

This is not about building another firewall. It’s about creating an intelligence that studies criminal behavior, anticipates emerging tactics, and evolves in real time—a white-hat AI creation that hunts threats, learns from adversaries, and stays ahead of the curve.

What’s more, these AI tools must be principled, accountable, and transparent. They should be designed not only by engineers, but also by experts in cybersecurity, law, and behavioral science. Their mission: to protect systems, data, and people without compromising ethics.

Governments may regulate the industries, but it is businesses that must act. Enterprises are the primary targets of AI-driven crime—and they must become the primary users of AI-driven defense. In the end, trust will not be restored by policy alone—it will be earned by intelligent systems that defend us, learn from us, and evolve with us.

Cybersecurity is no longer a technical silo—it’s a strategic priority. The winners won’t be the ones with the biggest budgets, but the ones with the smartest systems.

By Ivan Shkvarun, Forbes Councils Member, for Forbes Technology Council.

Ivan Shkvarun is the CEO and Co-founder of the data-driven investigation company Social Links (USA).