The OSINT Fact-Checking Toolkit

In the flood of online information, speed often wins over accuracy. Yet for investigators and analysts, rushing means risk. Fact-checking tools don’t replace human judgment—they sharpen it. The key is workflow: knowing how to verify information quickly without getting lost in tabs, speculation, or repetition.

This article breaks down a modern OSINT-based fact-checking process, showing how analysts verify claims, validate sources, and separate authentic digital evidence from manipulation. It blends newsroom verification discipline with open-source intelligence methods built for scale, speed, and reliability.

When new content appears—a viral claim, a leaked document, or a breaking video—the first step is a rapid triage. These checks prevent wasted hours chasing false leads:

Experienced teams often log this triage in reusable templates—recording claim, date, and result—creating an audit trail for every decision.

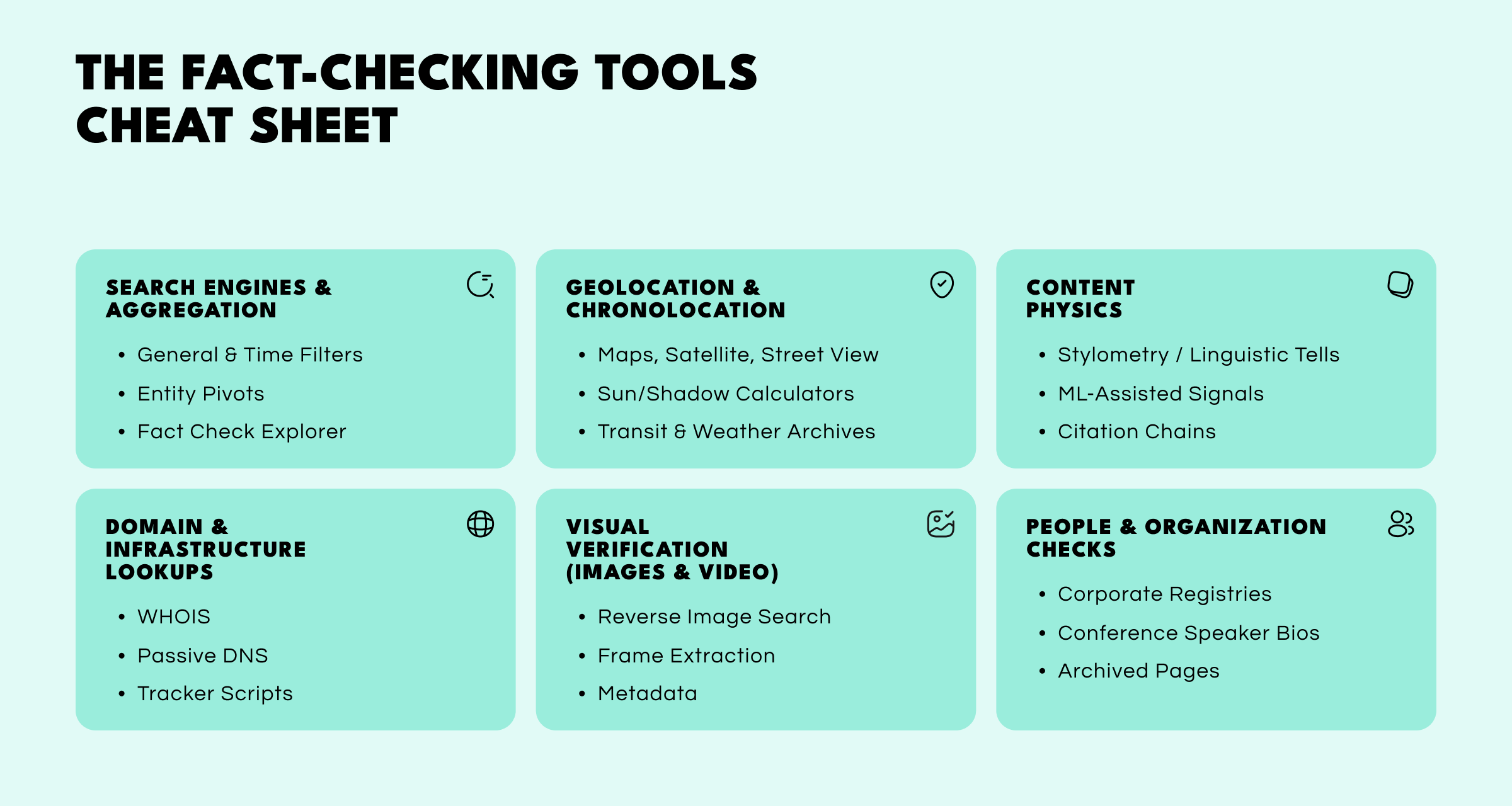
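A triage log can be as simple as an append-only JSON Lines file. The sketch below assumes a hypothetical `TriageEntry` record holding the claim, the date of the check, and the result. The field names and file layout are illustrative, not a standard format.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass


@dataclass
class TriageEntry:
    """One triage decision: what was claimed, when it was checked, what was decided."""
    claim: str
    checked_on: str  # ISO 8601 date of the triage check
    result: str      # e.g. "proceed", "discard", "needs more info"
    notes: str = ""


def append_entry(path: str, entry: TriageEntry) -> None:
    """Append one entry as a JSON line; earlier decisions are never rewritten."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(entry), ensure_ascii=False) + "\n")


def load_entries(path: str) -> list[TriageEntry]:
    """Read the audit trail back in the order decisions were logged."""
    with open(path, encoding="utf-8") as fh:
        return [TriageEntry(**json.loads(line)) for line in fh if line.strip()]
```

Appending instead of overwriting is the design choice that keeps the log usable as an audit trail. Every decision, including reversed ones, stays on record.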

You don’t need a hundred bookmarks. A balanced toolkit of about a dozen fact-checking tools, spread across the main verification categories, covers most real-world cases. Most professional workflows rely on six:

Search is still the fastest orientation method. Running multiple engines with time filters (past 24 hours or custom range) exposes reporting gaps and potential bias. Specialized resources like Google’s Fact Check Explorer show if a claim has already been reviewed by trusted outlets.

Use for: Breaking news or rumor verification.

Limitations: Results skew toward mainstream outlets, and niche regions are often under-reported.
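Google’s Fact Check Explorer is backed by the Fact Check Tools API, whose `claims:search` method can also be queried from scripts. The sketch below only builds a request URL and flattens a response, so it runs without network access. It assumes you have an API key. The response field names (`claims`, `claimReview`, `publisher`, `textualRating`) reflect our understanding of the v1alpha1 API and should be checked against its reference documentation. The helper function names are hypothetical.

```python
from __future__ import annotations

from urllib.parse import urlencode

# Fact Check Tools API search endpoint (requires an API key).
FACT_CHECK_ENDPOINT = "https://factchecktools.googleapis.com/v1alpha1/claims:search"


def build_claim_search_url(query: str, api_key: str, max_age_days: int | None = None,
                           language: str = "en", page_size: int = 10) -> str:
    """Build a claims:search URL; max_age_days limits results to recent reviews."""
    params = {"query": query, "languageCode": language,
              "pageSize": page_size, "key": api_key}
    if max_age_days is not None:
        params["maxAgeDays"] = max_age_days
    return f"{FACT_CHECK_ENDPOINT}?{urlencode(params)}"


def summarize_reviews(response: dict) -> list[tuple[str, str, str]]:
    """Flatten a parsed JSON response into (publisher, rating, review URL) tuples."""
    rows = []
    for claim in response.get("claims", []):
        for review in claim.get("claimReview", []):
            publisher = review.get("publisher", {}).get("name", "unknown")
            rows.append((publisher, review.get("textualRating", ""), review.get("url", "")))
    return rows
```

Fetching the URL with any HTTP client and passing the decoded JSON to `summarize_reviews` gives a quick list of outlets that have already reviewed a claim.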

A quick check of a site’s WHOIS record, DNS history, or analytics tags can reveal who runs it—or at least when it was created. Coordinated disinformation often relies on freshly registered domains with similar infrastructure patterns.

Use for: Suspicious websites or anonymous blogs.

Limitations: Privacy shields often conceal ownership, so registration data needs to be combined with other traces.
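Domain age is one of the quickest signals to automate. Registration data can be retrieved as RDAP JSON, for example through a public RDAP service such as rdap.org. The sketch below assumes such a response is already in hand and reads the `registration` entry from its `events` array. The 30-day threshold is an arbitrary assumption, not an industry rule.

```python
from __future__ import annotations

from datetime import datetime


def registration_date(rdap: dict) -> datetime | None:
    """Return the 'registration' event date from an RDAP domain response, if any."""
    for event in rdap.get("events", []):
        if event.get("eventAction") == "registration":
            # RDAP dates are RFC 3339 strings, typically ending in 'Z' (UTC).
            return datetime.fromisoformat(event["eventDate"].replace("Z", "+00:00"))
    return None


def is_freshly_registered(rdap: dict, now: datetime, max_age_days: int = 30) -> bool | None:
    """True if the domain is younger than max_age_days; None if no registration date.

    `now` must be timezone-aware, since RDAP dates carry a UTC offset.
    """
    created = registration_date(rdap)
    if created is None:
        return None
    return (now - created).days < max_age_days
```

A `None` result is deliberately distinct from `False`. Missing data is a gap to note in the case file, not evidence that a domain is old.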

Images and videos spread faster than text, so visual verification is vital. Reverse image search reveals earlier appearances, while frame extraction from video identifies original uploads. Basic metadata and error-level analysis help detect editing or forgery.

Use for: Viral posts and “breaking footage.”

Limitations: Lookalike content and stripped metadata.
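Reverse image engines use their own, undisclosed matching methods. The basic idea behind finding lookalikes can still be illustrated with a simple perceptual hash. This sketch computes an average hash in pure Python over a grayscale pixel grid, which is assumed to be already decoded and to have dimensions divisible by 8. Brightened or re-encoded copies of the same picture produce nearly identical hashes, while different images do not.

```python
from __future__ import annotations


def average_hash(pixels: list[list[int]], size: int = 8) -> list[int]:
    """Average hash of a grayscale image given as rows of 0-255 values.

    Height and width must be multiples of `size` (a simplification; real
    tools resize the image first).
    """
    h, w = len(pixels), len(pixels[0])
    bh, bw = h // size, w // size
    blocks = []
    for by in range(size):
        for bx in range(size):
            cells = [pixels[y][x]
                     for y in range(by * bh, (by + 1) * bh)
                     for x in range(bx * bw, (bx + 1) * bw)]
            blocks.append(sum(cells) / len(cells))
    mean = sum(blocks) / len(blocks)
    # Each bit records whether a block is brighter than the image average.
    return [1 if b > mean else 0 for b in blocks]


def hamming(a: list[int], b: list[int]) -> int:
    """Number of differing bits; a small distance suggests the same underlying image."""
    return sum(x != y for x, y in zip(a, b))
```

Because the hash only records relative brightness, uniform edits such as brightness changes leave it unchanged. This is also why lookalike content can produce false matches.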

Matching footage to its real-world setting is the gold standard of verification. Analysts compare terrain, skylines, signage, and even shadow angles to estimate time of day. Weather or traffic archives add further confirmation.

Use for: On-scene claims or event footage.

Limitations: Cityscapes change over time, so check the capture date of any reference imagery and cross-validate against imagery from around the claimed date.
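Shadow angles can be turned into a rough time estimate with basic solar geometry. The sun’s elevation is the arctangent of object height over shadow length. The standard relation sin(elevation) = sin(lat) sin(dec) + cos(lat) cos(dec) cos(H) can then be solved for the hour angle H. The sketch below assumes Cooper’s approximation for the solar declination. It returns local solar time, which differs from clock time because of the time-zone offset, longitude, and the equation of time. Treat the output as a window to compare against other evidence, not as a timestamp.

```python
from __future__ import annotations

import math


def solar_declination(day_of_year: int) -> float:
    """Approximate solar declination in degrees (Cooper's formula)."""
    return 23.45 * math.sin(math.radians(360 / 365 * (284 + day_of_year)))


def solar_times_from_shadow(height: float, shadow: float, latitude: float,
                            day_of_year: int) -> tuple[float, float] | None:
    """Estimate the local solar times (in hours) at which an object of `height`
    casts a shadow of length `shadow`.

    Returns (morning, afternoon), or None if the sun never reaches that
    elevation on that day at that latitude.
    """
    elevation = math.atan2(height, shadow)  # sun elevation, radians
    lat = math.radians(latitude)
    dec = math.radians(solar_declination(day_of_year))
    # Solve sin(elev) = sin(lat) sin(dec) + cos(lat) cos(dec) cos(H) for H.
    cos_h = (math.sin(elevation) - math.sin(lat) * math.sin(dec)) / (
        math.cos(lat) * math.cos(dec))
    if abs(cos_h) > 1:
        return None
    hour_angle = math.degrees(math.acos(cos_h))  # degrees away from solar noon
    offset = hour_angle / 15                     # Earth turns 15 degrees per hour
    return 12 - offset, 12 + offset
```

A shadow as long as the object is tall means a 45-degree sun. Near the equator around the March equinox, that happens roughly three hours either side of solar noon.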

Fact-checking individuals or institutions means verifying identity. Company registries, court filings, and archived bios reveal inconsistencies or fake affiliations. A mismatched CV and LinkedIn profile is often the first red flag.

Use for: Insider quotes or expert commentary.

Limitations: AI-generated personas and fake experts are on the rise.
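Comparing a CV against a public profile is mostly careful bookkeeping. The sketch below assumes both histories have been transcribed into a hypothetical `Role` record (organization, start year, end year) and lists every mismatch. It matches roles by organization name only, so several roles at the same employer would need a richer key.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    org: str
    start: int  # start year
    end: int    # end year (use the current year for ongoing roles)


def compare_histories(cv: list[Role], profile: list[Role]) -> list[str]:
    """List discrepancies between two employment histories, matched by organization."""
    issues = []
    by_org = {r.org.casefold(): r for r in profile}
    for role in cv:
        other = by_org.get(role.org.casefold())
        if other is None:
            issues.append(f"{role.org}: on CV but not on profile")
        elif (role.start, role.end) != (other.start, other.end):
            issues.append(f"{role.org}: CV {role.start}-{role.end} "
                          f"vs profile {other.start}-{other.end}")
    cv_orgs = {r.org.casefold() for r in cv}
    for role in profile:
        if role.org.casefold() not in cv_orgs:
            issues.append(f"{role.org}: on profile but not on CV")
    return issues
```

Each discrepancy is a question to check against registries or court filings, not proof of fabrication on its own.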

Language carries fingerprints. Stylometry and linguistic analysis identify text reuse, tone shifts, or automated writing. Citation tracing—following each link back to the first verifiable fact—exposes instances where multiple outlets rely on the same unverified post.

Use for: Polished articles and coordinated narratives.

Limitations: AI detection is probabilistic; results should be treated as clues, not verdicts.
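Text reuse is commonly measured by splitting documents into overlapping word sequences (shingles) and computing the Jaccard similarity of the two sets. This minimal sketch uses 3-word shingles, which is an illustrative choice rather than a tuned value. A high score between two outlets’ copy suggests a shared source, which citation tracing can then confirm.

```python
from __future__ import annotations

import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences from a text, lowercased, punctuation dropped."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str, k: int = 3) -> float:
    """Share of k-word shingles two texts have in common (0.0 to 1.0)."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)
```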

Once the toolkit is ready, verification follows a clear sequence designed for repeatability:

Extract the precise statement and log three timestamps: when the claim was reported, the local time at the claimed location, and when it was first published. Separate overlapping claims early so each can be verified on its own.
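Timestamps logged in different time zones are only comparable once they are normalized. The sketch below assumes every timestamp carries an explicit UTC offset and uses a hypothetical `ClaimRecord`. A negative gap between the claimed event and first publication, meaning the content appeared before the event supposedly happened, is an immediate red flag.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class ClaimRecord:
    statement: str
    first_published: datetime  # earliest known publication, with its UTC offset
    reported: datetime         # when the claim reached the team
    event_local: datetime      # claimed event time, in the location's own offset

    def as_utc(self) -> dict[str, str]:
        """All timestamps converted to UTC so they can be compared directly."""
        return {name: getattr(self, name).astimezone(timezone.utc).isoformat()
                for name in ("first_published", "reported", "event_local")}

    def hours_from_event_to_publication(self) -> float:
        """Negative values mean the content predates the claimed event."""
        return (self.first_published - self.event_local).total_seconds() / 3600
```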

Trace who said it first, who amplified it, and who linked to it. Most misinformation chains collapse once their weak origin is found.
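Tracing a chain back can be modeled as following “cites” or “reshared from” links until you reach a post that cites nothing. The sketch assumes posts have already been collected into a dictionary with a publication time and an optional `source` ID. This structure is hypothetical. The sketch also shows how several outlets can collapse into a single origin, the pattern that citation tracing exposes.

```python
from __future__ import annotations

from datetime import datetime


def trace_origin(posts: dict[str, dict], start: str) -> list[str]:
    """Follow 'source' links from `start` back toward the origin.

    Stops at a post that cites nothing, at a loop, or at a source we have not
    collected (the chain then ends at the last post we can see).
    """
    chain, seen = [start], {start}
    while (src := posts[chain[-1]].get("source")) is not None:
        if src in seen or src not in posts:
            break
        chain.append(src)
        seen.add(src)
    return chain


def independent_origins(posts: dict[str, dict], outlets: list[str]) -> set[str]:
    """Distinct origins behind a set of outlets; one origin means no real corroboration."""
    return {trace_origin(posts, o)[-1] for o in outlets}


def earliest(posts: dict[str, dict]) -> str:
    """ID of the earliest post by publication time (ISO 8601 with offset)."""
    return min(posts, key=lambda pid: datetime.fromisoformat(posts[pid]["published"]))
```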

For visuals, run multi-engine reverse searches and compare frames. For text, audit metadata and style. For technical claims, validate indicators in a sandbox or test environment.
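Before indicators go into a sandbox, it helps to confirm what kind of indicator each one is. This sketch performs only a syntactic check with Python’s standard `ipaddress` and `re` modules. It recognizes IP addresses, SHA-256 hashes, and domain names, and it undoes the common `[.]` defanging. It says nothing about whether an indicator is malicious. The label names are illustrative.

```python
import ipaddress
import re

SHA256_RE = re.compile(r"[0-9a-fA-F]{64}")
# Labels of letters, digits and inner hyphens, ending in an alphabetic TLD.
DOMAIN_RE = re.compile(
    r"(?=.{1,253}$)(?:[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?\.)+[a-z]{2,63}", re.I)


def classify_indicator(value: str) -> str:
    """Label an indicator as 'ip', 'sha256', 'domain' or 'unknown'."""
    value = value.strip().replace("[.]", ".")  # refang example[.]com
    try:
        ipaddress.ip_address(value)
        return "ip"
    except ValueError:
        pass
    if SHA256_RE.fullmatch(value):
        return "sha256"
    if DOMAIN_RE.fullmatch(value):
        return "domain"
    return "unknown"
```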

Public registries, local press, and municipal data can often act as ground truth. Official filings anchor OSINT verification when rumor outweighs fact.

Write a concise conclusion with a confidence level (low, medium, high) and document unresolved questions. Save screenshots and query logs to ensure transparency.
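A conclusion is easier to audit when it is stored as structured data next to the evidence. The sketch below uses a hypothetical `Verdict` record with the three confidence levels named above and an explicit list of unresolved questions. It is serialized to JSON for the case file.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from enum import Enum


class Confidence(str, Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class Verdict:
    claim: str
    conclusion: str
    confidence: Confidence
    evidence: list[str] = field(default_factory=list)    # screenshots, query logs, URLs
    unresolved: list[str] = field(default_factory=list)  # open questions, stated explicitly

    def to_json(self) -> str:
        data = asdict(self)
        data["confidence"] = self.confidence.value  # store the plain label
        return json.dumps(data, indent=2, ensure_ascii=False)
```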

A claim of a “Fortune 500 company leak” begins circulating on X (Twitter). A reverse image search finds the same screenshot, with the same layout and the same data, posted in 2022. Conclusion: the content is recycled, and the claim of a new leak earns a low confidence rating.

Takeaway: Always locate the earliest verifiable appearance of a given rumor.

A clip claims to show a strike from “today.” A reverse video search finds the same footage archived a year earlier. Weather records add a second signal: it was raining locally on the earlier date, while today’s conditions are clear.

Takeaway: Chronolocation beats gut-level plausibility and confirmation bias.

Efficient fact-checking depends on shared systems, not individual brilliance. Effective teams maintain:

Curated tool collections. A shortlist of trusted utilities with usage notes.

Decision trees. Prebuilt “if-then” paths for recurring claim types.

Red-team drills. Simulated hoaxes to test accuracy under pressure.

Rotating skeptics. A designated analyst who challenges weak conclusions.

Debrief logs. Post-case reviews of what worked and what didn’t.

Together, these structures create repeatable verification workflows that hold up to legal or public scrutiny.
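At its simplest, a decision tree for recurring claim types can be a lookup table of ordered checks. The playbook entries below are hypothetical examples drawn from the categories in this article, not a recommended standard. Teams would replace them with their own paths and tools.

```python
from __future__ import annotations

# Hypothetical playbook: claim type -> ordered checks.
PLAYBOOK = {
    "image": ["reverse image search (2+ engines)", "check metadata", "geolocate scene"],
    "video": ["extract key frames", "reverse search frames", "chronolocate (shadows, weather)"],
    "website": ["WHOIS/RDAP lookup", "DNS history", "shared analytics tags"],
    "quote": ["find original source", "verify speaker identity", "check archives"],
}


def next_checks(claim_type: str, done: set[str]) -> list[str]:
    """Remaining checks for a claim type, in order; unknown types are escalated."""
    steps = PLAYBOOK.get(claim_type)
    if steps is None:
        return ["escalate: no playbook for this claim type"]
    return [s for s in steps if s not in done]
```

Keeping the playbook as plain data means it can be reviewed in debriefs and updated without touching the logic.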

A credible fact-check output includes:

Even an “uncertain” result is valuable when documented properly—because it proves rigor, not guesswork.

Every investigator balances competing forces:

Balancing these trade-offs is part of real investigative discipline. Every fact-check involves choices—when to stop digging, when to flag uncertainty, and when to trust a weak signal just enough to keep moving. The strongest analysts aren’t the fastest or most confident; they’re the ones who know where the edges of their certainty lie and document those limits before drawing conclusions.

Fact-checking in OSINT is about clarity, not complexity. A focused workflow, disciplined verification, and transparent documentation transform a flood of unverified claims into actionable intelligence. The best tools don’t replace human insight—they make it faster, cleaner, and more defensible.

Select one reliable option per category—search, archive, reverse image, domain lookup, and metadata. Test them against past cases to confirm consistency and speed.

Time-filtered searches, multi-engine image checks, and archive comparisons. These methods provide fast, defensible signals when full investigations aren’t possible.

By identifying the original post, tracing earliest uploads across platforms, and examining account history. Cross-check visuals with geolocation and external reports.

Both aim to confirm the truth, but OSINT leans on technical traces—domain data, metadata, infrastructure—while traditional journalism relies more on human sources.

We recommend maintaining a compact list: one claim-review aggregator, two strong regional outlets, and one domain-specific site (e.g., science, security). But treat them as references, not authorities.
